Cyber Threat Intelligence Sharing

1. Lecture 06

- Class: Malware Analysis and Incident Forensics

- Topic: Threat Intelligence

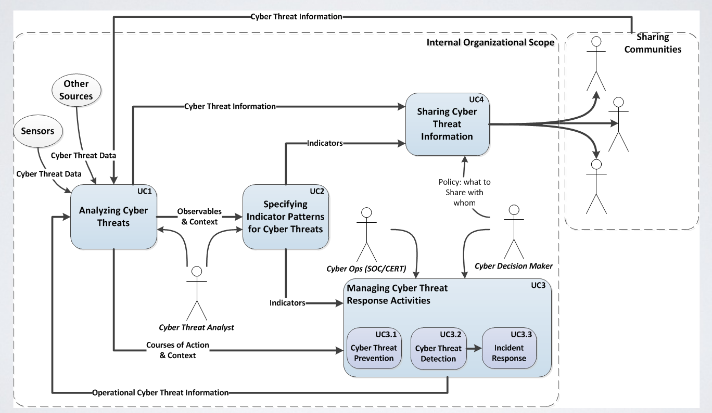

Through the threat intelligence process we can distill pieces of intelligence that help to better protect the organization. One important element of threat intelligence is the collection of information. Information can be gathered from all the sensors deployed inside the network and on the hosts. Another source of information is the historical data on detected attacks. Data can also come from open-source feeds or from paid sources.

2. Sharing Intelligence

Over the last ten years we have witnessed the growth of the threat intelligence sharing community. The reason is that an adversary rarely targets a single company: the same adversary is likely acting against similar organizations as well, or may even be a competitor.

If there are means to share intelligence among various companies, they will be able to find the best possible response and to build a profile of the attacker.

The goal is to share cyber threat intelligence, vulnerabilities, configurations, best practices, knowledge and tips with a larger external community, enhancing the cybersecurity of the individual organization as well as that of the entire community.

For example, many companies working in the same business sector share intelligence freely among themselves, because they are all targets of similar adversaries. Banks started to sign agreements in which they commit to sharing information about newly discovered vulnerabilities.

In Italy there is the CERT Fin (Financial CERT), whose purpose is to propose a community response to attacks, perform trials and tests, and much more, acting as a mediator for information sharing.

Building defenses on top of more information is better than building them on top of less. An information sharing community is one of the best ways to acquire quality data. Today a lot of effort is coming from national entities to enforce sharing between companies of the same sector.

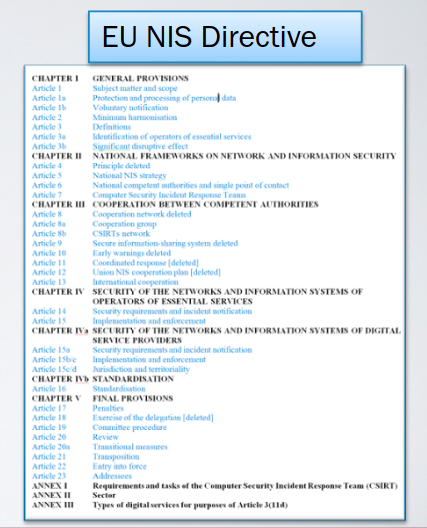

Shared information often comes with a lot of technical detail, which competitors could use to gain a competitive advantage. Sharing information therefore carries a risk, and for this reason the EU pushed the evolution of this process through regulation. The strongest push came from the NIS Directive.

2.1. NIS

The NIS Directive was meant to regulate how critical infrastructure must implement cybersecurity. Among its provisions is the requirement to create internal processes capable of producing and sharing threat intelligence information. The directive delegates the application of those rules to the individual EU member states, but it defines the economic sectors involved, including:

- Energy distributors

- Water distribution

- Financial sector

- Healthcare

In Italy, for example, the list of these companies is secret, but there are obvious names: large telco providers and energy providers.

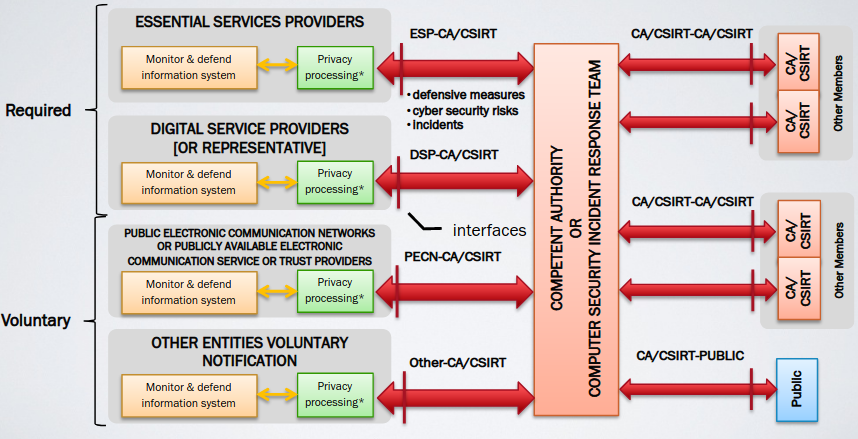

The imposition of CTI sharing gave rise to various companies that provide this kind of service. The regulation also specifies with which organizations the information has to be shared. A third party is required to ensure that the confidential information of each actor remains secret.

3. Sharing Models

A couple of sharing models are widely adopted. The first is the so-called Hub & Spoke model, or centralized model.

3.1. Hub & Spoke

It is composed of a set of organizations that share data with a central node (a third party), which is responsible for handling the data and anonymizing it. This is the most common model. Most of the time the third party is a national institute or a private organization that is trusted to some degree by all the other members.

The advantage of a centralized approach is that the central node is in the best position to enrich the available data by correlating it. The central position also makes it possible to perform macro trend analysis and to enforce the agreed rules, and it keeps the technical cost low for organizations that want to join the sharing community, for example by providing pre-configured software.

The problem is that it requires wide-scale trust in the central organization: all participants must fully trust the central actor.
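The role of the central node can be illustrated with a minimal sketch. The `Hub` class, the `org` and `indicator` field names and the sample reports are all hypothetical and only meant to show the two duties described above: anonymizing what the spokes submit and correlating it to expose trends.

```python
from collections import Counter


def anonymize(report: dict) -> dict:
    """Return a copy of the report without the field naming the submitter."""
    return {key: value for key, value in report.items() if key != "org"}


class Hub:
    """Hypothetical central node of a Hub & Spoke sharing community."""

    def __init__(self) -> None:
        self._reports: list[dict] = []

    def submit(self, report: dict) -> None:
        # The hub stores only the anonymized version of each report.
        self._reports.append(anonymize(report))

    def trend(self) -> Counter:
        # Correlation across spokes: how many reports mention each indicator.
        return Counter(report["indicator"] for report in self._reports)


hub = Hub()
hub.submit({"org": "bank-a", "indicator": "198.51.100.7"})
hub.submit({"org": "bank-b", "indicator": "198.51.100.7"})
hub.submit({"org": "bank-c", "indicator": "203.0.113.9"})
```

No single spoke could see that two different members reported the same address; only the hub can, which is also why every member has to trust it.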

3.2. Peer to Peer

This model is based on an agreement between two organizations: both parties decide which kind of information to share and at what level of detail. It is tailored for efficiency and speed, and because the two parties can trust each other directly, it enables the exchange of detailed information. This sharing model requires more sophisticated and mature cyber threat sharing operations, and it is very hard to scale, so it is used with only a handful of organizations.

3.3. Use cases

The centralized model is preferred whenever the information sharing process derives from a regulatory requirement and involves a large number of different actors.

The peer-to-peer model is adopted in large supply chains, that is, between companies that collaborate to build a single product. That is because the supply chain itself may be the target.

4. Sharing Technologies

Different technologies are used to set up those processes. We will consider two of them, which address the two most common problems of information sharing:

- Information languages. It is important to agree on the meaning of each piece of information, since it will be ingested both by automatic systems (threat intelligence platforms) and by humans. Several standards have been proposed: STIX (currently the de facto standard), MAEC, and others.

- Sharing protocols. Protocols are needed to define how information is shared and which message conveys a given piece of information. Examples are TAXII (the de facto standard) and RID.

Other tools widely used are vocabularies and taxonomies.

4.1. STIX

Structured Threat Information Expression (STIX) is a language and a serialization format used to exchange cyber threat intelligence. It addresses two subproblems: a syntactic one and a semantic one. The first is easy to solve; the second is much more difficult to address.

In the last five years it became a global standard supported by OASIS. STIX has two major versions; the second one (STIX 2.0 in these notes) is the one used today.

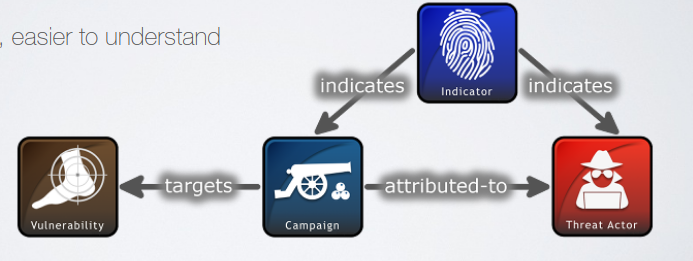

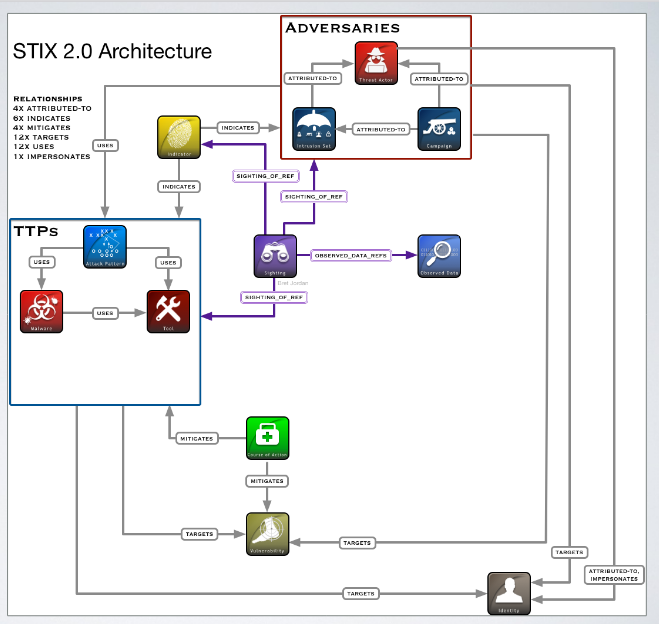

In STIX, information is represented in a graph-based model in which both vertices and relationships have their own properties. Vertices are called STIX Domain Objects: different objects can be populated with specific properties and connected via relationships. The advantage of a graph model is that it can easily be modified to alter the information it contains.

With STIX it is easy to add information, which supports the evolution of the cyber threat intelligence cycle and the enrichment of the information.

Another good aspect is that it is completely tool-agnostic: it can be stored in a standard relational database, a graph database or an ad-hoc platform, allowing the creation or adaptation of different platforms.

The STIX standard defines the model and specifies how to serialize it (JSON), ensuring interoperability.

The obvious use case of STIX is sharing threat intelligence information. The cyber decision makers are typically managers of the Chief Security Office. The data inside the cyber threat intelligence cycle may be represented using STIX.

Other use cases are threat response activities and setting up an incident response process. In principle it is possible to set up an internal threat intelligence process that uses STIX in all of its parts.

In the graph-based model there are Domain Objects and Relationships. In particular, twelve domain objects are defined:

- Attack Pattern. High level information about TTPs, describing ways threat actors attempt to compromise a system.

- Campaign. It describes how an actor behaves in relation to different targets.

- Course Of Action. It describes the set of actions to be taken to prevent an attack or respond to it.

- Identity. Individuals, organizations or groups.

- Indicator. It describes an indicator of compromise.

- Intrusion Set.

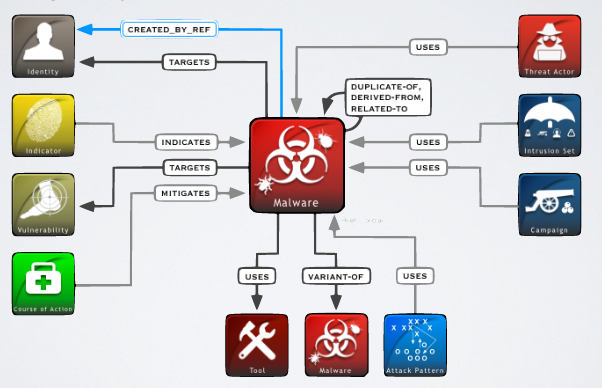

- Malware.

- Tool. A piece of software that the attacker may use against an organization. The difference between a tool and malware is that a tool is not malicious software in itself.

- Observed Data. Used to represent raw collected data that is not necessarily correlated to an IoC.

- Report. Collections of other elements used for reporting purposes.

- Threat Actor.

- Vulnerability.

STIX also defines high-level relationships that can be used to link different objects. A property of the relationship describes its meaning. Another kind of relationship is the Sighting, which denotes the belief that an element of CTI was seen.

The Sighting meta-relationship links an actor, a TTP and the indicators of compromise together with the observed data.

The idea of the meta-model is to provide a guide on how to relate different objects and how to map the acquired data onto the model itself.
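To make the graph model concrete, here is a minimal sketch that stores a few domain objects as vertices and a few relationships as edges, using plain dictionaries. The identifiers and names are hypothetical placeholders (real STIX identifiers have the form `<type>--<UUID>`), and `neighbours` is an illustrative helper, not something defined by the standard.

```python
# Vertices: domain objects, indexed by (placeholder) identifier.
objects = {
    "threat-actor--1": {"type": "threat-actor", "name": "Example Actor"},
    "malware--1": {"type": "malware", "name": "Example Malware"},
    "indicator--1": {"type": "indicator", "name": "Malicious IP"},
}

# Edges: each relationship carries its own properties, including its meaning.
relationships = [
    {"source_ref": "threat-actor--1", "relationship_type": "uses",
     "target_ref": "malware--1"},
    {"source_ref": "indicator--1", "relationship_type": "indicates",
     "target_ref": "malware--1"},
]


def neighbours(object_id: str) -> list[tuple[str, str]]:
    """List (relationship_type, other_object_id) for every edge touching object_id."""
    found = []
    for rel in relationships:
        if rel["source_ref"] == object_id:
            found.append((rel["relationship_type"], rel["target_ref"]))
        elif rel["target_ref"] == object_id:
            found.append((rel["relationship_type"], rel["source_ref"]))
    return found
```

Calling `neighbours("malware--1")` returns both the actor that uses the malware and the indicator that points to it, which is the kind of traversal the graph representation is meant to make easy.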

STIX also allows the analysis to focus on specific elements, for example a single instance of malware. This kind of focus can also be used to perform a sort of gap analysis, highlighting missing information.

4.1.1. JSON Representation

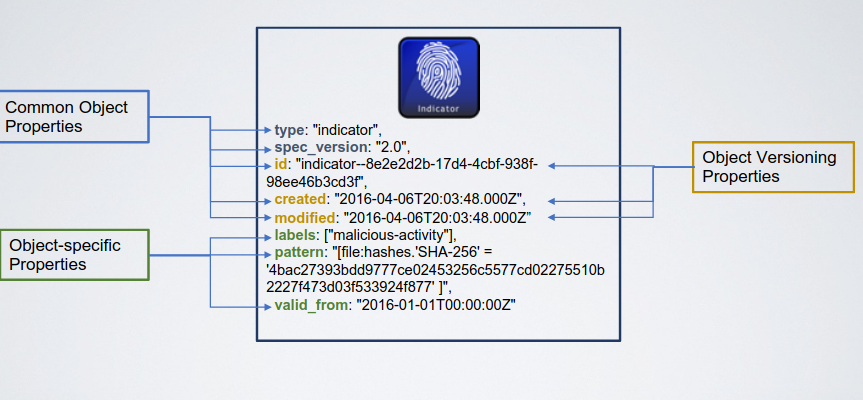

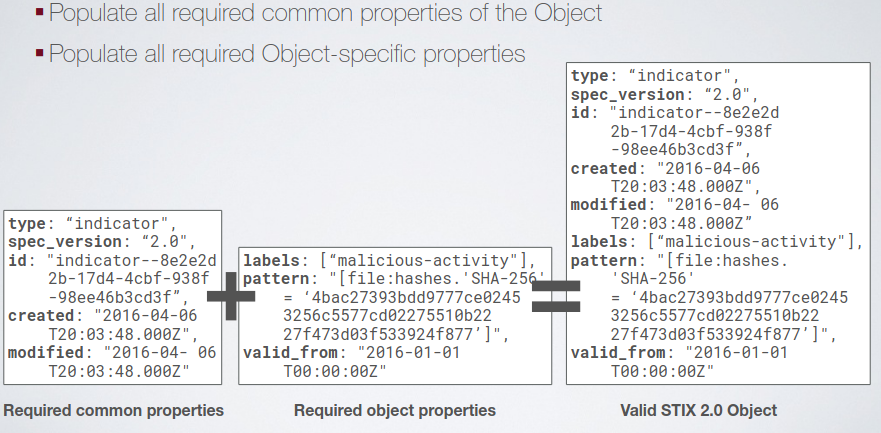

The STIX standard defines, for each object, a set of common properties and a set of object-specific properties. Notice that the common object properties represent basic information (type, version, id, timestamp of editing, timestamp of creation).

Among the properties common to all objects, some are compulsory, like the ones just cited; others are optional and may be specified object by object. The model is also open to additions. Object identifiers are compulsory elements (based on a version 4 UUID), because they are used to create direct references between objects.
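The common properties can be sketched with the standard library alone. The helper names `stix_timestamp` and `make_object` are hypothetical, and the sketch does not validate the properties that each object type requires; it only shows the `type`, `id`, `created` and `modified` fields and how the identifier is used as a reference.

```python
import json
import uuid
from datetime import datetime, timezone


def stix_timestamp() -> str:
    """Current UTC time with millisecond precision and a trailing 'Z'."""
    now = datetime.now(timezone.utc)
    return now.isoformat(timespec="milliseconds").replace("+00:00", "Z")


def make_object(object_type: str, **specific) -> dict:
    """Build a dictionary with the common properties plus object-specific ones."""
    now = stix_timestamp()
    obj = {
        "type": object_type,
        "id": f"{object_type}--{uuid.uuid4()}",  # <type>--<UUIDv4>
        "created": now,
        "modified": now,
    }
    obj.update(specific)
    return obj


malware = make_object("malware", name="Example Malware", labels=["trojan"])
tool = make_object("tool", name="Example Tool", labels=["remote-access"])

# The identifiers are what a relationship uses to reference the two objects.
relationship = make_object(
    "relationship",
    relationship_type="related-to",
    source_ref=tool["id"],
    target_ref=malware["id"],
)

serialized = json.dumps([malware, tool, relationship], indent=2)
```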

Some kinds of relationship are defined per object type, while others are common to different objects:

- derived-from

- duplicates-of

- related-to

4.1.2. Versioning

Considering that information is continuously updated by the intelligence cycle, it makes sense that the objects represented in the STIX model also evolve over time. In STIX an object is rarely deleted; instead it is updated with a revoked property. Once an object is revoked, no new relationships can be created from or to it, and it cannot be updated anymore.
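The versioning rules above can be sketched as follows. The function names `new_version`, `revoke` and `can_relate` are hypothetical; the sketch only assumes that a new version keeps `id` and `created`, refreshes `modified`, and that a `revoked` flag freezes the object.

```python
from datetime import datetime, timezone


def _now() -> str:
    """Current UTC time with millisecond precision and a trailing 'Z'."""
    now = datetime.now(timezone.utc)
    return now.isoformat(timespec="milliseconds").replace("+00:00", "Z")


def new_version(obj: dict, **changes) -> dict:
    """Return an updated copy of obj; revoked objects cannot be updated."""
    if obj.get("revoked", False):
        raise ValueError("revoked objects cannot be updated")
    updated = {**obj, **changes}
    updated["modified"] = _now()
    return updated


def revoke(obj: dict) -> dict:
    """Revoking is itself the last allowed update of an object."""
    return new_version(obj, revoked=True)


def can_relate(source: dict, target: dict) -> bool:
    """New relationships are allowed only between non-revoked objects."""
    return not source.get("revoked", False) and not target.get("revoked", False)


original = {
    "type": "indicator",
    "id": "indicator--example",  # placeholder identifier
    "created": "2020-01-01T00:00:00.000Z",
    "modified": "2020-01-01T00:00:00.000Z",
    "name": "old name",
}
updated = new_version(original, name="new name")
revoked = revoke(updated)
```

Returning copies instead of mutating in place keeps every earlier version available, which matches the idea that objects are superseded rather than deleted.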

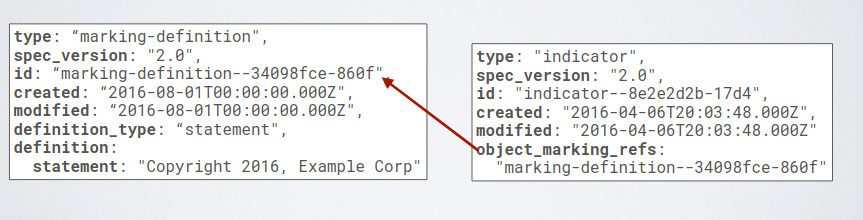

4.1.3. Data Markings

Data markings are properties that state how a specific piece of information should be handled (e.g. its level of confidentiality). Two different kinds of marking can be created: object or granular. An object marking applies to a full STIX object; sometimes, however, only some of an object's properties need to be restricted. Granular markings are defined for this purpose.

Data markings can be Statement markings or Traffic Light Protocol (TLP) markings.

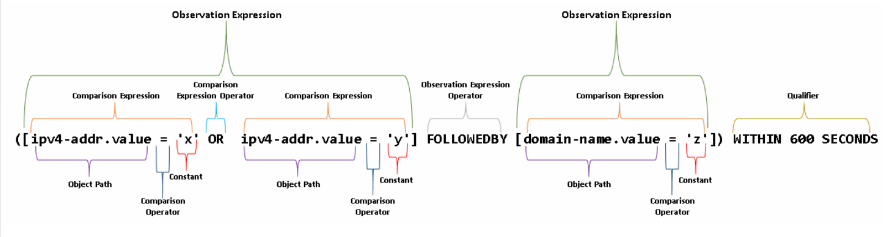

4.1.4. Patterns

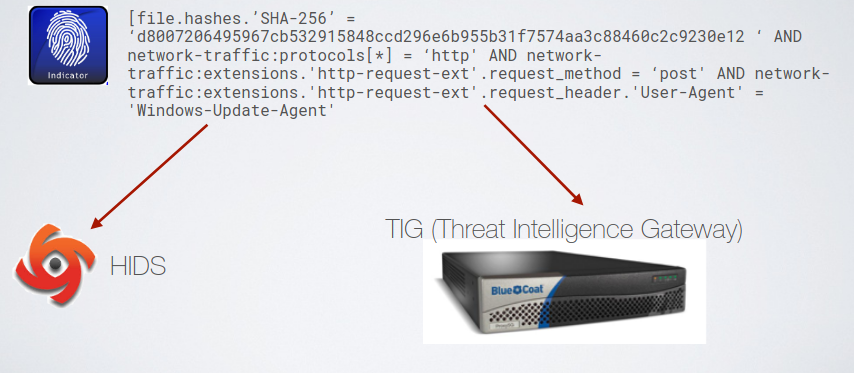

Patterns are useful to query the knowledge base and extract a subset of the graph. In STIX 2.0 patterns are expressed in a separate language with its own grammar, which is used in the Indicator domain object. The advantage of this approach is that it reasons on the whole graph, combining elements related to different indicators, which makes it possible to query a set of heterogeneous elements that would otherwise be hard to query together.

Specific operators define the events that should be matched. Patterns may need to be converted to a native query syntax if the security tools do not support STIX patterning natively.

A pattern may contain information that is monitored by different appliances, so that sub-patterns relate to different monitoring devices. The solution to this problem is to go through a SIEM, so that it is up to the SIEM to determine whether the rule is matched.
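As an illustration of converting a pattern to a native query, the sketch below handles only the simplest case: a single equality comparison such as `[ipv4-addr:value = '198.51.100.1']`. The target query syntax and the `FIELD_MAP` field names belong to a fictional SIEM and are assumptions; a real converter would need a full parser for the patterning grammar.

```python
import re

# Matches one comparison of the form [object-type:property = 'value'].
_SIMPLE_PATTERN = re.compile(
    r"^\[\s*([a-z0-9-]+):([a-z0-9_.]+)\s*=\s*'([^']*)'\s*\]$"
)

# Hypothetical mapping from STIX object paths to fields of a fictional SIEM.
FIELD_MAP = {
    ("ipv4-addr", "value"): "src_ip",
    ("domain-name", "value"): "dns_query",
}


def to_native_query(pattern: str) -> str:
    """Translate a single-comparison pattern into the fictional query syntax."""
    match = _SIMPLE_PATTERN.match(pattern.strip())
    if match is None:
        raise ValueError("only single equality comparisons are supported")
    object_type, property_path, value = match.groups()
    field = FIELD_MAP.get((object_type, property_path))
    if field is None:
        raise ValueError(f"no native field for {object_type}:{property_path}")
    return f'{field}="{value}"'
```

For example, `to_native_query("[ipv4-addr:value = '198.51.100.1']")` yields `src_ip="198.51.100.1"`, while compound patterns are rejected instead of being silently mistranslated.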

4.2. TAXII

Once the information is modeled, there is the need for a way to exchange it between organizations. A protocol is needed for this: since many companies will collaborate, they all need the same method of information sharing.

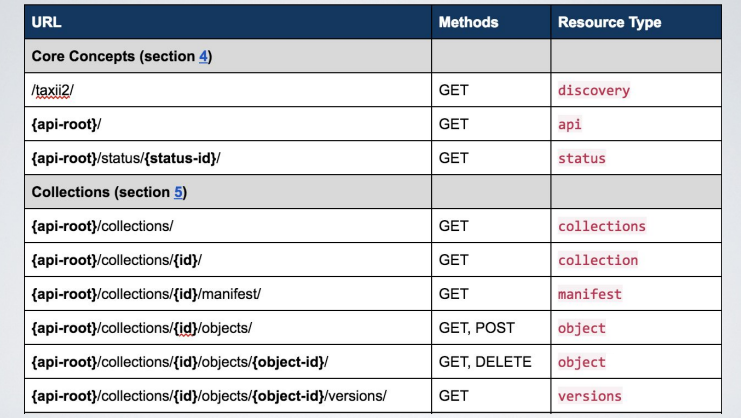

TAXII is managed by OASIS and was born as part of STIX; it stands for Trusted Automated Exchange of Intelligence Information. It defines a set of containers that can be used to share different threat information with different models. The idea is that all implementations should be interoperable given the API and the message syntax. It uses JSON as the standard message content format for interoperability and requires TLS 1.2 or higher for transport.

It defines two primary services, collections and channels, which represent common information sharing interfaces.

4.2.1. A Collection

It is an interface to a logical repository of CTI objects (they can be STIX objects). The collection makes these objects available to clients via a standard set of interfaces, following a standard request-response model. This approach is useful if the repository is considered a data source of intelligence information. It is not a perfect fit for all CTI use cases: a push-like service may be more useful.

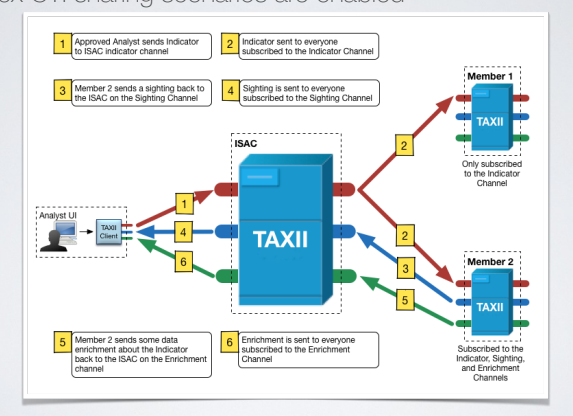

4.2.2. A Channel

It is the TAXII counterpart of the well-known publish-subscribe infrastructure: some of the parties are producers, which inject information into the channel, and the channel has a set of subscribed participants that immediately receive the newly pushed information. The channel is an abstraction that can be used to model different kinds of interaction, for example for specific sub-communities or for specific kinds of information (low-level IoCs, malware IoCs, TTPs, and other orthogonal points of view).
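The publish-subscribe idea behind a channel can be sketched in a few lines. This is only a conceptual illustration with a hypothetical `Channel` class and callback-based subscribers; it is not a TAXII API.

```python
from typing import Callable


class Channel:
    """Hypothetical in-process channel: producers publish, subscribers receive."""

    def __init__(self) -> None:
        self._subscribers: list[Callable[[dict], None]] = []

    def subscribe(self, callback: Callable[[dict], None]) -> None:
        self._subscribers.append(callback)

    def publish(self, obj: dict) -> None:
        # Push model: every subscriber is notified as soon as data is published.
        for callback in self._subscribers:
            callback(obj)


inbox_a: list[dict] = []
inbox_b: list[dict] = []

ioc_channel = Channel()
ioc_channel.subscribe(inbox_a.append)
ioc_channel.subscribe(inbox_b.append)
ioc_channel.publish({"type": "indicator", "name": "Malicious IP"})
```

Separate `Channel` instances could be created for each sub-community or kind of information, so that participants subscribe only to what they need.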

4.2.3. Combined models

With these models it is possible to implement several complex sharing scenarios.

In this case the ISAC (Information Sharing and Analysis Center) will become the hub of the CTI community, and the various participants in that ISAC will be the spokes that produce and receive the information. It is very common to have a TAXII implementation that uses both the channel and the collection model for communication between two organizations with well-defined trust boundaries. Not everything that is shared internally should be shared externally; the nice point is that each company can implement the way of communicating that suits it best.

4.3. API

The interface is built on top of a standard RESTful API.

5. Taxonomies

The third aspect of CTI sharing is the need to describe the structure in which objects are represented. The information should be unambiguous both semantically and syntactically. STIX does not provide a solution to this problem, and the community recognized that there is no single way to solve it; still, some taxonomies are commonly used when modelling information related to threats and incidents.

It is important to reduce the amount of free text and to restrict the content of the properties to a limited set of textual elements taken from a well-accepted vocabulary and taxonomy.

After all, the cyber kill chain is itself a vocabulary for the various stages of an attack, and starting from that concept many other vocabularies were created.

5.1. Attack patterns

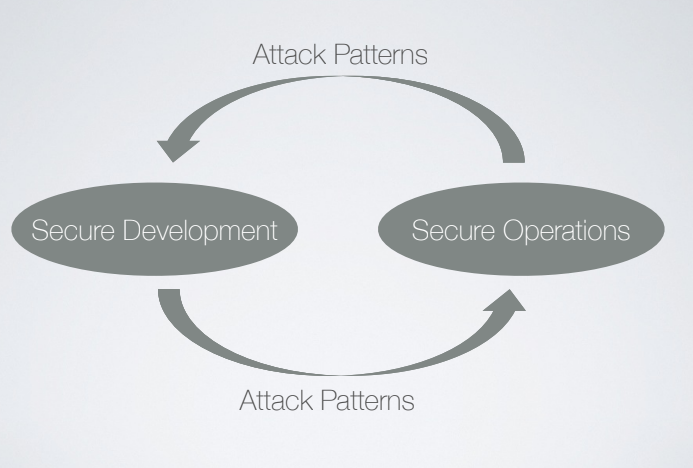

Thinking in terms of the cyber kill chain, an attack pattern generally represents the whole first part of the kill chain: once the attacker is inside the system, the penetration phase ends. There are various strategies that an attacker can use to take control of a system, so some organizations build collections of those strategies to help others identify what attack is in progress or build secure software.

An attack pattern captures the attacker’s perspective to aid software developers, acquirers and operators in improving the assurance profile of their systems.

5.1.1. Leveraging Attack Patterns in SDLC

In the security context there is the concept of building a system that is secure by design, so security has to be part of the design of the application itself: patching a system a posteriori is more expensive than creating it secure from the beginning, and some errors cannot be patched at all. Attack patterns can help the people who build software to consider the ways in which an attacker may attack it, so that those patterns cannot be leveraged in the future.

They help to contextualize how vulnerabilities might be exploited. Moreover, even once an application goes into production, periodic assessments must be carried out to ensure that the application and the related monitoring services are robust enough. Those assessments are guided by attack patterns.

SecOps knowledge offers unique value to SecDev.

5.1.2. CAPEC

MITRE CAPEC is a taxonomy of attack patterns.

5.1.3. ATT&CK

The MITRE ATT&CK taxonomy focuses on the second part of the kill chain: it typically describes everything that happens after the installation phase. It is organised in phases that map onto the cyber kill chain. It consists of tactic phases and lists many techniques available to adversaries for each phase. Combining CAPEC and ATT&CK is a good way to conduct a thorough analysis.

When sharing intelligence with another organization it is better to make a direct reference to the elements and the taxonomy used.
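One way to make such a direct reference is to attach taxonomy identifiers to an object instead of describing the technique in free text. The sketch below assumes the STIX `external_references` property and uses the CAPEC and ATT&CK entries for phishing as an example; the helper `taxonomy_ids` is hypothetical.

```python
attack_pattern = {
    "type": "attack-pattern",
    "name": "Phishing",
    "external_references": [
        # Each reference names the taxonomy and the entry within it.
        {"source_name": "capec", "external_id": "CAPEC-98"},
        {"source_name": "mitre-attack", "external_id": "T1566"},
    ],
}


def taxonomy_ids(obj: dict, source_name: str) -> list[str]:
    """Collect the identifiers that obj references in one taxonomy."""
    return [
        reference["external_id"]
        for reference in obj.get("external_references", [])
        if reference.get("source_name") == source_name
    ]
```

A receiving organization can then look up `taxonomy_ids(attack_pattern, "mitre-attack")` in its own copy of the taxonomy rather than interpreting a textual description.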

5.2. MAEC

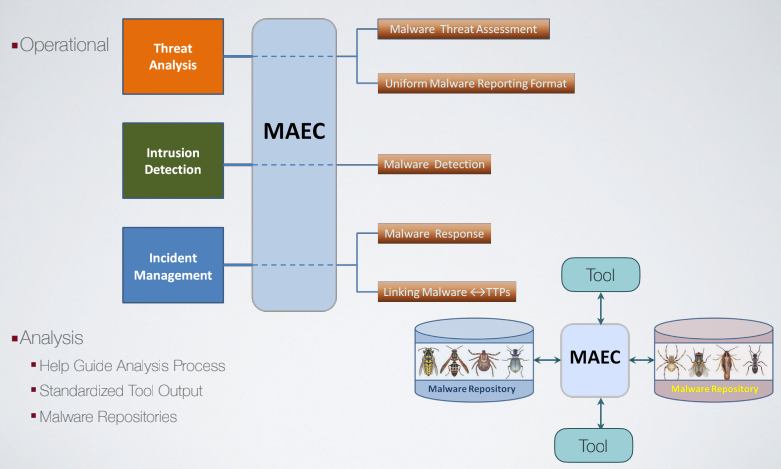

MAEC (Malware Attribute Enumeration and Characterization) is a language for sharing structured information about malware, developed in parallel with STIX. It includes a schema, enumerations (a set of vocabularies), and a bundle. It was not born to share information with third parties but to support malware analysis teams, so it is meant to be more of an operational tool.

MAEC should take center stage inside a malware analysis process: it is used to describe the content of a malware repository and to interact with tools that enrich that knowledge base.

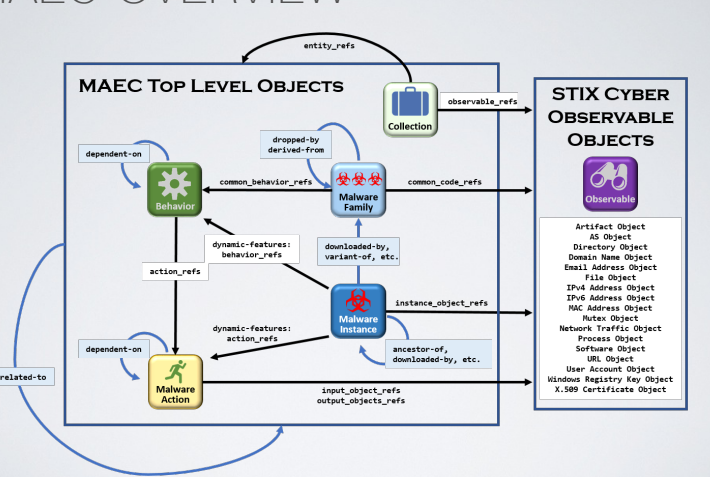

MAEC defines some top-level objects (TLOs), which are somewhat similar to the domain objects provided by STIX and are strictly related to the malware environment. MAEC types are similar to variable types in programming languages. Relationships connect TLOs, and the package represents the MAEC standard output format. Common data types are the data types shared across TLOs; unlike MAEC types, they are more complex pieces of data used to represent information coming from different standards.

Behaviors are the ways in which the malware interacts with the system; for example, a family of malware can share a common behavior. An action is the impact of the malware on the system. The behavior represents the intention, while the action describes how the malware actually interacts with the system.

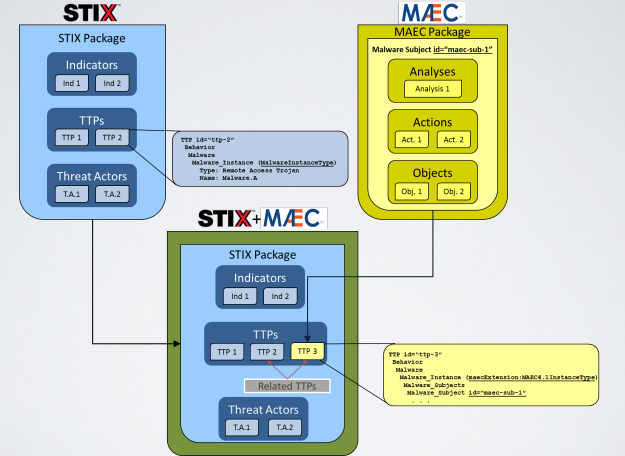

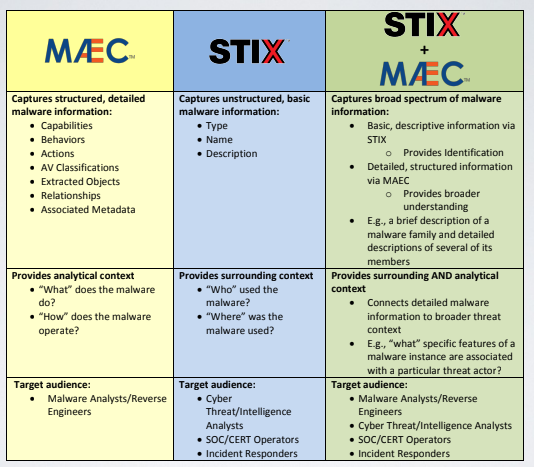

5.2.1. MAEC and STIX

MAEC is a domain language built for malware analysts, whereas STIX can capture only unstructured, basic malware information. However, MAEC lacks a higher-level view of the context in which the model is used.

Combining STIX and MAEC is the best choice for building a well-contextualized and detailed database of information. For this reason STIX provides a way to embed MAEC data structures natively.