Measuring Security Metrics

1. Lecture 21

- Class: Security Governance

- Topic: Security Metrics

- Reference: M. Pendleton, R. Garcia-Lebron, J. Cho, S. Xu: A Survey on Systems Security Metrics.

We have to emphasize the importance of taking measurements and of defining suitable metrics to quantify the aspects being measured. When discussing the Direct-Control loop, a basic principle behind a correct and efficient security governance process, we introduced the need for metrics that quantify, during the Control process, the benefits of the Direct process. Such metrics are necessary to measure how well the control loop works.

2. Recap: What is Security?

A security attribute is an abstraction representing the basic properties or characteristics of an entity with respect to safeguarding information. - NIST SP 800-53

When we talk about security, there are several things we could consider, and they must be captured by security attributes. An attribute is just one of the possible perspectives from which we can measure security: any aspect related to the protection of one of the security elements under consideration.

Cybersecurity consists in the prevention of damage to, protection of, and restoration of computers, electronic communications systems, electronic communications services, wire communication and electronic communication (including information contained therein) to ensure its availability, integrity, authentication, confidentiality and non-repudiation. - NIST SP 800-37

Here we have the elicitation of the most important security requirements to consider, which at some point we have to measure and quantify. Since each of them can be preserved to different degrees, we need a way to quantify these aspects; for this reason, we formalize security as the capability to preserve those attributes.

We divide security attributes into two classes. The primary attributes are the CIA properties:

- Confidentiality means protecting the system from unauthorized disclosure of information. It is not a binary attribute: there are several degrees of confidentiality that we can guarantee, and violations of confidentiality differ in severity.

- Integrity means the absence of unauthorized system alteration, so we have to preserve system integrity. Here too, we have to define a measure of how severely integrity is violated.

- Availability: here too, a system offers different services, so availability may be impaired partially rather than globally.

There are also secondary attributes: accountability, authenticity, and non-repudiability. They are secondary because they make sense only when the primary attributes are preserved, so they are taken into account at a second level of analysis, combined with the primary security attributes.

- Accountability means that the availability and integrity of the identity of the person who performed an operation are preserved. It follows from availability and integrity being preserved.

- Authenticity refers to the integrity of a message's content and origin, i.e., the capability of the system to preserve the correct nature of events.

- Non-repudiability deals with the capability of the system to correctly link an action to its source, relying on low-level information such as the IP address of the sender.

3. RECAP: Security Management as a Continuous Process

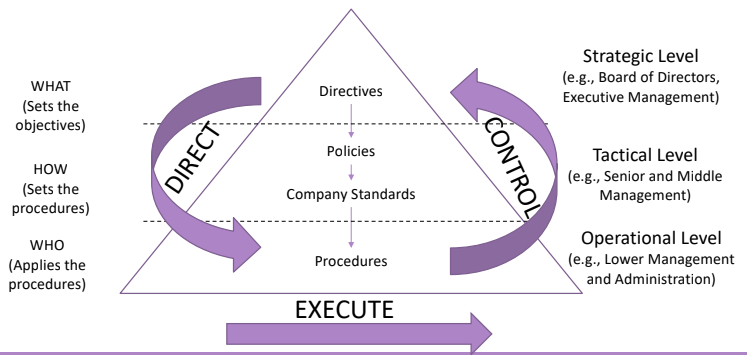

From the organization's point of view, this is a loop process that involves all three layers of the pyramid. It starts at the strategic level and moves to the tactical level, which outputs actions to be taken by the operational level, which in turn translates them into procedures.

The control process starts from the operational level: layer by layer, we verify whether the stated objective was achieved, completely or partially. We have defined compliance clauses that we can use to measure the benefit of the directions taken.

“In physical science the first essential step in the direction of learning any subject is to find principles of numerical reckoning and practicable methods for measuring some quality connected with it. I often say that when you can measure what you are speaking about, and express it in numbers, you know something about it; but when you cannot measure it, when you cannot express it in numbers, your knowledge is of a meagre and unsatisfactory kind; it may be the beginning of knowledge, but you have scarcely in your thoughts advanced to the state of Science, whatever the matter may be.” - Lord Kelvin

Here Lord Kelvin stresses the importance of measurement in any domain. Cybersecurity is a science, so if we want to understand what is going on we have to define and quantify variables. Knowledge passes through measurement.

You can't manage what you cannot measure. The effectiveness of management therefore depends on the ability to take measurements, interpret the message behind them, and act on it.

4. Security Metrics

Depending on the analysis task, there are plenty of security metrics; no single metric fits all needs.

For example, to validate the effectiveness of a Direct-Control loop, risk metrics are usually used: did the actions performed reduce risk? If so, the actions were effective. Similarly, vulnerability-based metrics are usually used to measure system exposure.

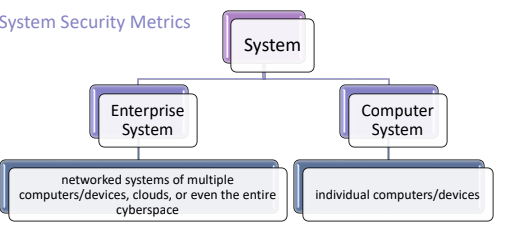

We'll review how to define system security metrics. There are two different definitions of a system: enterprise systems and computer systems.

4.1. Enterprise System

The Enterprise System is the set of all interconnected devices, together with the way they are connected (the broadest perspective). It is the whole system that supports the enterprise in achieving its objectives.

4.2. Computer System

It is a subset of the enterprise system, focused on a particular host or a small network.

4.3. General Notion of Security Metrics

When dealing with security metrics, there is a general approach that links the definition of metrics to the notions of attacker and defender, trying to capture the dependencies between what the attacker can do and what the defender can do. From this point of view, an attacker is an entity that represents the entry point of an attack (an IP address, privileges on a specific machine, a person); the incident is the outcome of the attack.

We can represent the attacker-defender interaction at the level of the enterprise system or at the level of the computer system.

4.4. Attack-Defense interaction in an Enterprise System.

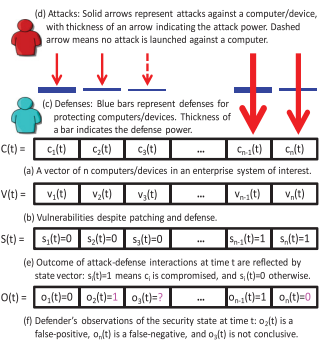

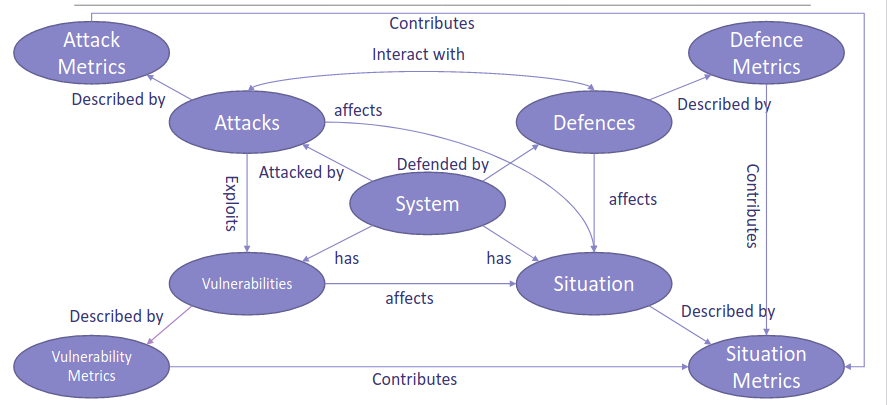

We can represent the Attack-Defense interaction with this figure:

The attacker has different means to attack the system, some easy and some more involved; the attacker can also use different attack vectors to get into the system.

Then there are the defenses, which are effective only when considered together with an attack vector and an attack. Using one or multiple metrics, we can try to model how the attacker can get into the system, measure the vulnerabilities that allow the attacker to get in, and measure the probability of success of some attacks; finally, we need to collect the evidence that we can use to express those concepts numerically.

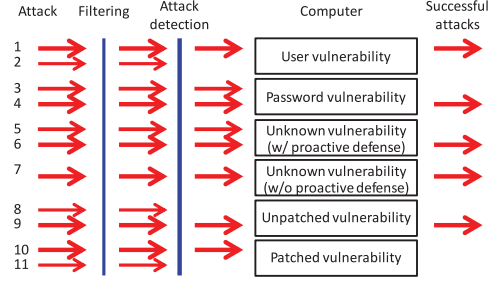

4.5. Attack-Defense interactions in a Computer

If we consider the attack-defense interaction on a single device, we look at low-level details, such as the filtering barriers around the host and the attack detection mechanisms, and then at the host itself, considering only the vulnerabilities that can be successfully exploited.

This perspective is not easy to measure, because every aspect in which vulnerabilities may arise must be taken into account.

4.6. Situation Understanding

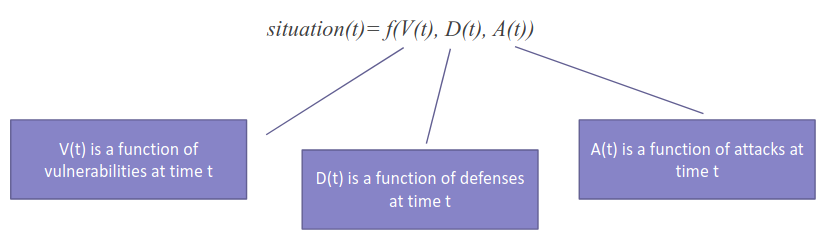

A situation metric is the aggregation of all the aspects that describe a system at a given time. We have to quantify the presence of vulnerabilities, the capability (strength) of the attacker, and the capability of the company to defend itself.

The situation is nothing more than a generic combination of those three aspects: Vulnerabilities, Attack, and Defense.

The central point of the analysis is the system, from which we can identify all the related aspects.

Now we can define metrics to quantify vulnerabilities, defenses, and attack capabilities, as well as metrics that quantify the current situation.

5. Vulnerability Metrics

The authors of … collected from the existing literature all the possible metrics used in the domain of cybersecurity to quantify Vulnerability Metrics.

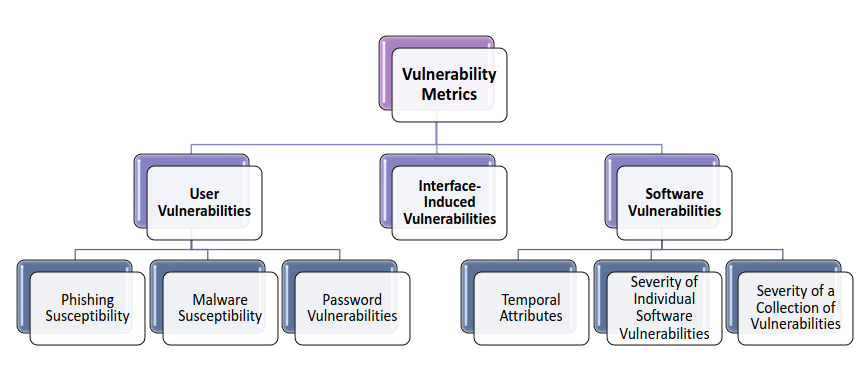

Vulnerability metrics can be decomposed in three main families: User Vulnerabilities, Interface Induced Vulnerabilities, Software Vulnerabilities.

5.1. User Vulnerabilities

There are three main types of metrics to consider: two quantify susceptibility to phishing and to malware, and the third concerns password vulnerabilities. The first two try to capture the user's cognitive biases, i.e., the fact that the user's decisions can be influenced by their own background and knowledge (a weakness leveraged by social engineering attacks). The third considers problems deriving from bad password management, so we try to measure how well users learn and apply good security practices.

5.1.1. Phishing Susceptibility.

Phishing susceptibility is commonly evaluated by measuring the number of false positives and false negatives that the user generates, using a ratio scale. The two values are collected through tests: the company generates several email campaigns, mixing simulated phishing emails injected by the company itself with legitimate emails, and then computes the false positives and false negatives.

The accuracy of these metrics is not easy to evaluate because of human bias: whether a person flags an email as phishing may also depend on psychological factors. The environment in which measurements are collected is not neutral, so the data has to be used with caution.

In this case too, it is difficult to compare the results with a target value or to compare different campaigns.
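A minimal Python sketch of the computation, assuming each test email is logged with its ground truth (simulated phishing or legitimate) and whether the user flagged it. The `Response` record and its field names are hypothetical; a false positive is a legitimate email flagged as phishing, and a false negative is a phishing email the user did not flag.

```python
from dataclasses import dataclass


@dataclass
class Response:
    is_phishing: bool  # ground truth: simulated phishing (True) or legitimate (False)
    flagged: bool      # whether the user reported the email as phishing


def phishing_susceptibility(responses):
    """Return (false_positive_rate, false_negative_rate) for a user or a campaign."""
    legit = [r for r in responses if not r.is_phishing]
    phish = [r for r in responses if r.is_phishing]
    false_positives = sum(r.flagged for r in legit)      # legitimate mail reported
    false_negatives = sum(not r.flagged for r in phish)  # phishing mail missed
    fpr = false_positives / len(legit) if legit else 0.0
    fnr = false_negatives / len(phish) if phish else 0.0
    return fpr, fnr


campaign = [Response(True, True), Response(True, True), Response(True, True),
            Response(True, False), Response(False, False), Response(False, False)]
print(phishing_susceptibility(campaign))
```

The false-negative rate is usually the riskier of the two, since each missed phishing email is a potential compromise.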

5.1.2. Malware Susceptibility

Malware susceptibility is closely related to a user's online behavior: users who install many applications are more likely to be exposed to malware, and the same holds for users who visit many websites. Here too, a ratio scale is used.

Most of the time, users get infected by downloading files from the web without checking the safety of the source. Again, we try to measure the user's capability to behave correctly, but users behave differently when they know they are being tested.

5.1.3. Password Vulnerabilities

Here we try to measure the user's compliance with good practices: using sufficiently complex passwords, not sharing them, and changing them frequently. For this reason, we need methods to measure password complexity. One of the most common metrics is entropy, the most intuitive metric for measuring the strength of a password:

- it is often estimated using heuristic rules, but

- it offers only a rough approximation of password weakness or strength, without telling which passwords are easier to crack than others.
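One common heuristic, sketched below in Python as an illustration rather than a standard, multiplies the password length by log2 of the size of the character pool inferred from the character classes present. The example also exhibits the weakness noted above: a dictionary word with a digit scores the same as a random string of the same length and classes.

```python
import math
import string


def estimate_entropy_bits(password: str) -> float:
    """Heuristic entropy estimate: len(password) * log2(pool size).

    The pool size is inferred from the character classes that appear.
    Characters outside these ASCII classes do not enlarge the pool
    (a simplification of this sketch).
    """
    pool = 0
    if any(c in string.ascii_lowercase for c in password):
        pool += 26
    if any(c in string.ascii_uppercase for c in password):
        pool += 26
    if any(c in string.digits for c in password):
        pool += 10
    if any(c in string.punctuation for c in password):
        pool += len(string.punctuation)  # 32 printable ASCII symbols
    if pool == 0:
        return 0.0
    return len(password) * math.log2(pool)


# Both use lowercase, uppercase, and digits over 9 characters, so they score equally.
print(round(estimate_entropy_bits("Password1"), 1))
print(round(estimate_entropy_bits("Xq7vLm2Rt"), 1))
```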

Another metric is password guessability: how easy it is for an external user to guess your password. This metric is used because most people choose mnemonic passwords composed of easily guessed information. It has two variants: statistical password guessability and parameterized password guessability.
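Statistical guessability is often reported as the fraction of passwords an attacker recovers within a fixed guess budget. The Python sketch below assumes a hypothetical attacker guess list ordered from most to least likely (for example, built from leaked passwords and personal details such as a pet's name and a year); the guess list and passwords are illustrative.

```python
def guess_ranks(guess_list):
    """Map each guess to its 1-based position in the attacker's ordered list."""
    rank = {}
    for position, guess in enumerate(guess_list, start=1):
        rank.setdefault(guess, position)  # keep the earliest position on duplicates
    return rank


def fraction_guessed(passwords, guess_list, budget):
    """Fraction of `passwords` found within the first `budget` guesses."""
    if not passwords:
        return 0.0
    rank = guess_ranks(guess_list)
    cracked = sum(1 for p in passwords if rank.get(p, budget + 1) <= budget)
    return cracked / len(passwords)


# Hypothetical guess list: common passwords first, then a guess built from
# personal information found online (pet name + year).
guesses = ["123456", "password", "fido2010", "qwerty"]
users = ["password", "fido2010", "Xq7vLm2Rt", "123456"]
for budget in (1, 2, 3, 4):
    print(budget, fraction_guessed(users, guesses, budget))
```

Plotting this fraction against the budget gives a guessability curve; a steep early rise means many users picked predictable passwords.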

Even today, little attention is paid to the information posted on social media, some of which an adversary can use to guess passwords.

The password meter metric is often used when a user registers or updates a password; it is more intuitively known as password strength. This kind of password meter also checks whether the user makes simple mistakes, such as reusing the same password or making little to no change to the new password.

5.2. Interface induced vulnerabilities

Here we try to measure something that lies between the user and the software: we want to evaluate how robust the interface is with respect to user behavior.

The metric typically used is the attack surface: we try to quantify the subset of resources that the attacker needs to use in order to enter one or more hosts.

We define a tuple of values that is possible to analyze:

\(\langle \sum_{m \in \mathcal{M}} R_{d,e} (m), \sum_{c \in \mathcal{C}} R_{d,e} (c), \sum_{i \in \mathcal{I}} R_{d,e} (i)\rangle\)

\(R_{d,e}\) represents the damage-effort ratio, i.e., the potential damage an attacker can cause through a resource relative to the effort required to use it, computed for each element of:

- the set of methods \(\mathcal{M}\) (the entry and exit points of the software),

- the set of channels offered by the software \(\mathcal{C}\), and

- the set of data items of the software \(\mathcal{I}\).

Each component of the tuple therefore expresses how effective attacks through the corresponding resources could be, relative to the effort they require. The more inputs the software accepts, the more attack vectors the attacker can use.
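The tuple can be sketched in Python as three sums of damage-effort ratios. The numeric damage and effort values, and the `Resource` type, are hypothetical placeholders; in practice they would come from an assessment of privileges and access rights, which this sketch does not model.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Resource:
    name: str
    damage: float  # hypothetical potential damage, on any consistent scale
    effort: float  # hypothetical attacker effort to use the resource, must be > 0


def damage_effort_ratio(resource: Resource) -> float:
    return resource.damage / resource.effort


def attack_surface(methods, channels, data_items):
    """Return the tuple (sum over M, sum over C, sum over I) of damage-effort ratios."""
    return (
        sum(damage_effort_ratio(m) for m in methods),
        sum(damage_effort_ratio(c) for c in channels),
        sum(damage_effort_ratio(i) for i in data_items),
    )


surface = attack_surface(
    methods=[Resource("login_form", 5, 1), Resource("admin_api", 5, 5)],
    channels=[Resource("tcp_443", 3, 1)],
    data_items=[Resource("config_file", 2, 4)],
)
print(surface)
```

Adding an entry point, or lowering the effort needed to use an existing one, enlarges the corresponding component of the tuple.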

5.3. Software Vulnerabilities

Considering software vulnerabilities, we have three different types of metrics that we can use:

- Temporal Attributes,

- Severity of individual software vulnerabilities, and

- Severity of a collection of vulnerabilities.

5.3.1. Temporal Attributes

Concerning temporal attributes, there are two aspects we can quantify: the evolution of a vulnerability and its lifetime. Typically, when a vulnerability is disclosed, there is a period of time in which an attacker can exploit it but it is not yet possible to defend against it. Eventually the problem is, hopefully, completely solved; otherwise the patches turn out to be ineffective and the system remains vulnerable.

| Metric | Description |
|---|---|
| Historical vulnerability | Measures the degree to which a system was vulnerable in the past |
| Historically exploited vulnerability | Considers how many exploits of a vulnerability were carried out in the past |
| Future vulnerability | Measures the number of vulnerabilities that will be discovered during a future period of time (the most difficult to measure) |
| Future exploited vulnerability | Measures the number of vulnerabilities that will be exploited during a future period of time |
| Tendency to be exploited | Measures the tendency of a vulnerability to be exploited, using information available in public repositories or from CERTs |

Concerning vulnerability lifetime, the difficulty of patching depends on where the vulnerability resides:

| Type | Difficulty of Patching |
|---|---|
| Client-end vulnerabilities | Not under your control; it is only possible to urge clients to patch them. |
| Server-end vulnerabilities | Usually patched more rapidly. |
| Cloud-end vulnerabilities | The patching process is rather slow, partly because patching is not the responsibility of the cloud service user but is under the control of the provider. |

5.3.2. Severity of individual software vulnerability.

From this point of view, the community has made a huge effort to create scores that measure the severity of vulnerabilities and weaknesses: respectively, the CVSS score and the CWSS score.
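As an illustration of how such a score is computed, here is a Python sketch of the CVSS v3.1 base score equations published by FIRST. It handles only the eight base metrics, parsed from a vector string, and omits temporal and environmental metrics as well as input validation; it is not a replacement for the official calculator.

```python
import math

# Base metric weights from the CVSS v3.1 specification (FIRST).
ATTACK_VECTOR = {"N": 0.85, "A": 0.62, "L": 0.55, "P": 0.2}
ATTACK_COMPLEXITY = {"L": 0.77, "H": 0.44}
PRIVILEGES_UNCHANGED = {"N": 0.85, "L": 0.62, "H": 0.27}
PRIVILEGES_CHANGED = {"N": 0.85, "L": 0.68, "H": 0.5}  # used when Scope is Changed
USER_INTERACTION = {"N": 0.85, "R": 0.62}
CIA_IMPACT = {"H": 0.56, "L": 0.22, "N": 0.0}


def roundup(value: float) -> float:
    """CVSS v3.1 Roundup: smallest one-decimal number >= value, avoiding float artifacts."""
    int_input = math.floor(value * 100000 + 0.5)  # round to nearest integer
    if int_input % 10000 == 0:
        return int_input / 100000.0
    return (math.floor(int_input / 10000) + 1) / 10.0


def cvss31_base_score(vector: str) -> float:
    """Base score for a vector such as 'CVSS:3.1/AV:N/AC:L/PR:N/UI:N/S:U/C:H/I:H/A:H'."""
    metrics = dict(part.split(":") for part in vector.split("/")[1:])
    scope_changed = metrics["S"] == "C"

    # Impact sub-score from the confidentiality, integrity, availability impacts.
    iss = 1 - ((1 - CIA_IMPACT[metrics["C"]])
               * (1 - CIA_IMPACT[metrics["I"]])
               * (1 - CIA_IMPACT[metrics["A"]]))
    if scope_changed:
        impact = 7.52 * (iss - 0.029) - 3.25 * (iss - 0.02) ** 15
    else:
        impact = 6.42 * iss

    privileges = PRIVILEGES_CHANGED if scope_changed else PRIVILEGES_UNCHANGED
    exploitability = (8.22 * ATTACK_VECTOR[metrics["AV"]]
                      * ATTACK_COMPLEXITY[metrics["AC"]]
                      * privileges[metrics["PR"]]
                      * USER_INTERACTION[metrics["UI"]])

    if impact <= 0:
        return 0.0
    if scope_changed:
        return roundup(min(1.08 * (impact + exploitability), 10))
    return roundup(min(impact + exploitability, 10))


print(cvss31_base_score("CVSS:3.1/AV:N/AC:L/PR:N/UI:N/S:U/C:H/I:H/A:H"))
```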

5.3.3. Severity of a collection of Vulnerabilities.

Now we consider a set of vulnerabilities and compute a metric that measures the severity of their presence on the network or on a particular host. Such metrics are useful because real-world attacks are performed in multiple steps and exploit multiple vulnerabilities.

There are many different ways to compute these measures, for example attack trees and attack graphs. We can try to quantify the average time of an attack, and its likelihood along an attack path computed over an attack graph.

There are two different approaches to consider: deterministic approach and probabilistic approach.

5.3.3.1. Deterministic Approach

It comprises the metrics that can be computed deterministically. For example, we can compute topology metrics, which measure how the topological properties of attack graphs affect network attacks:

- Depth metric

- Shortest path

- Existence, number, and lengths of attack paths metrics.
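A small Python sketch of the path-based topology metrics, assuming the attack graph is given as an adjacency list where nodes are attacker states (for example, access to a host) and edges are exploits. The graph is hypothetical, and enumerating all simple paths is exponential in general, so this approach only suits small graphs.

```python
from collections import deque

# Hypothetical attack graph: node -> states reachable with one exploit.
ATTACK_GRAPH = {
    "internet": ["web", "vpn"],
    "web": ["app", "db"],
    "vpn": ["app"],
    "app": ["db"],
    "db": [],
}


def attack_paths(graph, source, goal):
    """All simple paths from source to goal (exponential in general: small graphs only)."""
    paths = []
    stack = [(source, [source])]
    while stack:
        node, path = stack.pop()
        if node == goal:
            paths.append(path)
            continue
        for nxt in graph.get(node, []):
            if nxt not in path:  # skip cycles
                stack.append((nxt, path + [nxt]))
    return paths


def shortest_path_length(graph, source, goal):
    """Fewest exploits needed to reach goal (BFS); None if the goal is unreachable."""
    dist = {source: 0}
    queue = deque([source])
    while queue:
        node = queue.popleft()
        if node == goal:
            return dist[node]
        for nxt in graph.get(node, []):
            if nxt not in dist:
                dist[nxt] = dist[node] + 1
                queue.append(nxt)
    return None


paths = attack_paths(ATTACK_GRAPH, "internet", "db")
print("paths exist:", bool(paths))
print("number of paths:", len(paths))
print("path lengths:", sorted(len(p) - 1 for p in paths))
print("shortest path:", shortest_path_length(ATTACK_GRAPH, "internet", "db"))
```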

Effort metrics are another example of deterministic metrics. They try to capture the effort the attacker needs to compromise the system, given the vulnerabilities considered:

- Necessary defense: considers the minimum cut between a source and a destination.

- Effort to security failure: measures an attacker's effort to reach its goal state.

- Weakest adversary: measures the minimum adversary capabilities required to achieve an attack goal.

- k-zero-day-safety: measures the number of zero-day vulnerabilities an attacker needs to compromise a target. If the network is dense, fewer vulnerabilities are needed.
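The necessary defense metric can be approximated, under the simplifying assumption that the defender blocks individual exploits (edges of the attack graph), by the size of the minimum edge cut between source and goal. By the max-flow/min-cut theorem this equals a maximum flow with unit capacities; the Python sketch below computes it with Edmonds-Karp (BFS augmenting paths) on a hypothetical graph.

```python
from collections import defaultdict, deque


def min_cut_size(edges, source, target):
    """Minimum number of exploit edges to remove so `target` is unreachable from `source`.

    Computed as the maximum source-target flow with unit capacity per edge.
    """
    capacity = defaultdict(int)
    neighbors = defaultdict(set)
    for u, v in edges:
        capacity[(u, v)] += 1
        neighbors[u].add(v)
        neighbors[v].add(u)  # allow traversal of residual (reverse) edges
    flow = 0
    while True:
        # BFS for an augmenting path in the residual graph.
        parent = {source: None}
        queue = deque([source])
        while queue and target not in parent:
            u = queue.popleft()
            for v in neighbors[u]:
                if v not in parent and capacity[(u, v)] > 0:
                    parent[v] = u
                    queue.append(v)
        if target not in parent:
            return flow
        # Push one unit of flow back along the path found.
        v = target
        while parent[v] is not None:
            u = parent[v]
            capacity[(u, v)] -= 1
            capacity[(v, u)] += 1
            v = u
        flow += 1


EDGES = [("internet", "web"), ("internet", "vpn"), ("web", "app"),
         ("web", "db"), ("vpn", "app"), ("app", "db")]
print(min_cut_size(EDGES, "internet", "db"))
```

Here the defender would need to block two exploits (for example, the two edges entering `db`) to cut every path.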

The main drawback of the deterministic approach is that it assumes that the presence of a vulnerability leads to a successful exploit. Many exploits depend on a specific time frame and on other preconditions that are not considered, in addition to the privileges and the presence of the vulnerability itself.

5.3.3.2. Probabilistic Approach

To overcome this problem, we can use probabilities to take into account that an exploit can be successful or unsuccessful, refining all the metrics introduced so far by associating a probability of success with every exploit (e.g., modeling attack paths as Markov chains).

We can design other metrics that extend the ones discussed, leveraging the CVSS score to define the likelihood of an attack path, or accounting for the defense tools installed on the network, which reduce the likelihood of a successful exploit.
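A minimal Python sketch of the probabilistic refinement. It assumes, purely for illustration, that an exploit's success probability is its CVSS base score divided by 10, scaled down by a hypothetical defense effectiveness factor, and that exploits along a path succeed independently; published models calibrate these probabilities in different ways.

```python
import math


def exploit_probability(cvss_base: float, defense_effect: float = 0.0) -> float:
    """ASSUMED mapping: P(success) = (CVSS base / 10) * (1 - defense_effect).

    defense_effect is the hypothetical fraction of attempts stopped by
    defenses on that step (0 = no defense, 1 = always blocked).
    """
    return (cvss_base / 10.0) * (1.0 - defense_effect)


def path_likelihood(step_probabilities):
    """Probability that every exploit on the path succeeds (independence assumed)."""
    return math.prod(step_probabilities)


# Two hypothetical attack paths to the database server.
paths = {
    "via_web": [exploit_probability(9.8, defense_effect=0.5),  # web app behind a WAF
                exploit_probability(7.5)],
    "via_vpn": [exploit_probability(6.5), exploit_probability(8.8)],
}
likelihoods = {name: path_likelihood(p) for name, p in paths.items()}
most_likely = max(likelihoods, key=likelihoods.get)
print(likelihoods, most_likely)
```

Note how the defense on the web path makes the nominally less severe VPN path the more likely one.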

6. Defense Metrics

It is difficult to capture the essence of attack and defense techniques; the metrics defined simply try to give an overview of the defense barriers.

They try to measure the capability of the organization to defend itself. They are partitioned into four main categories: strength of preventive defenses, strength of reactive defenses, strength of proactive defenses, and strength of overall defenses.

6.1. Preventive Defenses

We are trying to evaluate and quantify the strength of all the elements deployed to protect the system a priori. The main mechanisms evaluated in the literature are blacklisting, data execution prevention (DEP), which prevents injected data from being executed as code, and control flow integrity (CFI), which ensures that the software executed inside the organization follows its intended control flow.

Of course, this is not an exhaustive list; there are many other prevention techniques, but the problem is that they lack evaluation criteria and corresponding metrics.

6.1.1. Blacklisting

A blacklist is a useful and lightweight defense mechanism. It is typically used to protect the organization from unauthorized access by blocking suspicious IP addresses.

A blacklisting mechanism is effective when it reflects the current set of malicious IP addresses that try to interact with the company. Two metrics can be used:

- Reaction time: how long it takes for a new malicious IP address to be blacklisted.

- Coverage: the fraction of malicious players that are blacklisted.

They look at two orthogonal perspectives, but together they give a good measure.
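Both metrics can be computed from two observations per malicious IP address: when it was first seen attacking and, if ever, when it was added to the blacklist. The Python sketch below assumes these timestamps are available (in hours here) and uses addresses from documentation ranges; the data is illustrative.

```python
def blacklist_metrics(first_seen, blacklisted_at):
    """Return (coverage, mean_reaction_time).

    first_seen:     dict IP -> time the malicious IP was first observed attacking
    blacklisted_at: dict IP -> time the IP was added to the blacklist
                    (malicious IPs missing from this dict were never listed)
    """
    malicious = set(first_seen)
    listed = malicious & set(blacklisted_at)
    coverage = len(listed) / len(malicious) if malicious else 0.0
    # An IP listed before it attacked us counts as zero reaction time.
    delays = [max(0, blacklisted_at[ip] - first_seen[ip]) for ip in listed]
    mean_reaction = sum(delays) / len(delays) if delays else None
    return coverage, mean_reaction


first_seen = {"198.51.100.7": 0, "203.0.113.9": 10, "192.0.2.1": 5, "192.0.2.2": 3}
blacklisted_at = {"198.51.100.7": 4, "203.0.113.9": 12, "192.0.2.1": 5}
print(blacklist_metrics(first_seen, blacklisted_at))
```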

6.1.2. DEP

For DEP, no real metric exists. The community measures DEP as the probability of being compromised by a certain attack \(A(t)\) over all possible classes of attacks.

6.1.3. Control Flow Integrity

In the research community three main metrics have been proposed: average indirect target reduction, average target size, and evasion resistance.

- Average indirect target reduction: measures how much CFI reduces, on average, the number of targets that each indirect control transfer can reach, compared with the unprotected program.

- Average target size: it is the ratio between the size of the largest target, and the number of targets.

- Evasion resistance: measures how resistant the CFI mechanism is against evasion techniques, i.e., attacks designed to bypass CFI.

6.2. Reactive Defenses

We want to evaluate the capability to identify anomalies, classify them correctly, and trigger a fast reaction; in other words, we want to evaluate monitoring and detection mechanisms.

6.2.1. Monitoring

| Metric | Description |
|---|---|
| Coverage | Measures the fraction of events detectable by a specific sensor deployment, reflecting the defender's needs in monitoring events. |
| Redundancy | Estimates the amount of evidence provided by a given sensor: whether the information extracted contributes new information or offers a different view of an already inspected part of the network. |
| Confidence | Measures how well the deployed sensors detect an event in the presence of compromised sensors. It indicates how much we can trust the measured information; false negatives, false positives, and error percentage are some metrics to use. |
| Cost | Measures the resources consumed by deploying, operating, and maintaining sensors. We also have to measure how much the sensors interfere with the normal data flow of the system (indirect cost). |
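Coverage and a simple form of redundancy can be computed from a mapping of which sensor can observe which events. The Python sketch below measures redundancy as the number of sensors that observe each event, a simplification of the evidence-based notion in the table; sensor and event names are hypothetical.

```python
def monitoring_metrics(events, sensors):
    """Return (coverage, redundancy).

    events:  set of events the defender needs to monitor
    sensors: dict sensor name -> set of events that sensor can detect
    coverage:   fraction of `events` detectable by at least one sensor
    redundancy: dict event -> number of sensors that detect it
    """
    detected = set().union(*sensors.values()) & events
    coverage = len(detected) / len(events) if events else 0.0
    redundancy = {e: sum(e in seen for seen in sensors.values()) for e in events}
    return coverage, redundancy


events = {"port_scan", "brute_force_login", "dns_tunnel", "usb_insert"}
sensors = {
    "network_ids": {"port_scan", "brute_force_login"},
    "siem_rules": {"brute_force_login", "dns_tunnel"},
}
coverage, redundancy = monitoring_metrics(events, sensors)
print(coverage, redundancy["brute_force_login"], redundancy["usb_insert"])
```

An event with redundancy 0 is a monitoring gap; an event with high redundancy may indicate sensors whose cost buys little new information.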

6.2.2. Detection

There are different perspectives over which we can try to capture the goodness of a detection mechanism.

A first metric is the individual strength. We consider two aspects: the speed of the detection, and the accuracy of the detection.

Detection time is the delay between time \(t_0\), at which a compromised computer sends its first scan packet, and time \(t\), at which a scan packet is observed by the instrument.

Accuracy metrics include true positives, false positives, true negatives, false negatives, the ROC curve, the IDOC curve, and cost.
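A Python sketch of the individual-strength metrics: detection time as defined above, and accuracy rates derived from confusion-matrix counts. Each detection threshold yields one (false-positive rate, true-positive rate) point of the ROC curve. The scores, labels, and timestamps are illustrative, and both classes are assumed to be present.

```python
def detection_time(t0: float, t: float) -> float:
    """Delay between the first scan packet sent (t0) and its observation (t)."""
    return t - t0


def accuracy(tp: int, fp: int, tn: int, fn: int) -> dict:
    return {
        "true_positive_rate": tp / (tp + fn),   # detection rate
        "false_positive_rate": fp / (fp + tn),  # false alarm rate
    }


def roc_points(scores, labels, thresholds):
    """(FPR, TPR) for each threshold; an alert fires when score >= threshold."""
    positives = sum(labels)
    negatives = len(labels) - positives
    points = []
    for th in thresholds:
        tp = sum(1 for s, y in zip(scores, labels) if s >= th and y)
        fp = sum(1 for s, y in zip(scores, labels) if s >= th and not y)
        points.append((fp / negatives, tp / positives))
    return points


print(detection_time(t0=100.0, t=112.5))
print(accuracy(tp=90, fp=5, tn=95, fn=10))
print(roc_points([0.9, 0.8, 0.3, 0.2], [True, False, True, False], [0.5, 0.1]))
```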

A second metric is relative strength, which evaluates a detection mechanism by comparing it with other detection tools. Of course, the comparison must be performed fairly. A defense tool offers no extra strength if it cannot detect any attack left undetected by the other defense tools.

The third metric is collective strength, which measures the combined strength of all the detection mechanisms in place.
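Treating each tool's detections as a set of attack identifiers makes relative and collective strength simple set operations. The Python sketch below assumes all tools were tested fairly on the same attack set; tool and attack names are hypothetical.

```python
def relative_strength(detected_by, tool):
    """Attacks detected by `tool` and by no other tool (empty set = no extra strength)."""
    others = set().union(*(d for name, d in detected_by.items() if name != tool))
    return detected_by[tool] - others


def collective_strength(detected_by, attacks):
    """Fraction of `attacks` detected by at least one deployed mechanism."""
    return len(set().union(*detected_by.values()) & attacks) / len(attacks)


attacks = {"a1", "a2", "a3", "a4"}
detected_by = {
    "ids_a": {"a1", "a2"},
    "ids_b": {"a2", "a3"},
    "antivirus": {"a1"},
}
print(relative_strength(detected_by, "ids_b"))      # only ids_b catches a3
print(relative_strength(detected_by, "antivirus"))  # adds nothing over the others
print(collective_strength(detected_by, attacks))
```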

6.3. Proactive Defenses

This category provides only two classes of metrics:

- Address space layout randomization metrics, and

- Moving target defense

6.4. Strength of Overall Defenses

We try to evaluate the robustness of the overall defense mechanism. First, we evaluate how well we can contain a specific kind of attack: penetration resistance is measured by running a penetration test to estimate the level of effort required for a red team to penetrate the system.

Network diversity measures the least or average effort an attacker must make to compromise a target entity, based on the causal relationships between the resource types to be considered. Diversity is effective only if it is applied with good criteria. Replication is not enough: simply cloning a system increases performance but not security, because the same exploit works against every clone. All the instances must therefore be diverse functional components that delay the attack. Network diversity must be taken into account during the design phase.

7. Attack Metrics

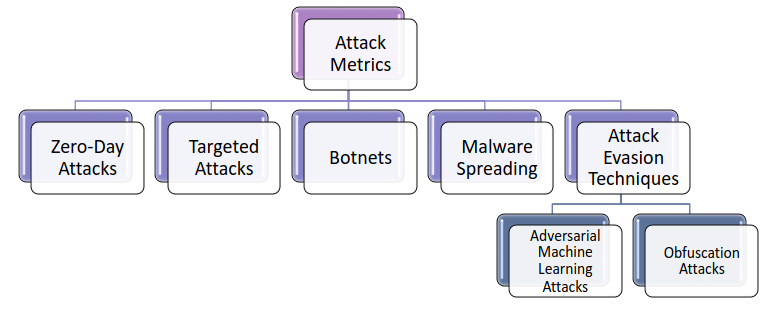

Attack metrics are strictly related to the specific type of attack; the current taxonomy is the following:

7.1. Zero-Day Attacks

We can try to measure two main elements of a zero-day attack: its lifetime and its victims. The first is the period between when the attack was launched and when the corresponding vulnerability was disclosed to the public; the second is the number of computers compromised (or that may be compromised) by it.

7.2. Targeted Attacks

These metrics measure the success of APTs. The success of a targeted attack often depends on the delivery of malware and on the tactics used to lure a target into opening a malicious email attachment.

We define the threat index metric as \(\alpha \times \beta\), where \(\alpha\) is the sophistication of the social engineering tactic used, and \(\beta\) is the technical sophistication of the malware (or attack vector) used.

7.3. Botnets

Botnets have been studied for a long time, so the literature offers several metrics.

| Metric | Description |
|---|---|
| Botnet size | The cardinality of the botnet, i.e., the number of bots |
| Network bandwidth | The network bandwidth that a botnet can use to launch DDoS attacks |
| Botnet efficiency | Measures the botnet's capability to communicate with its command-and-control (C2) infrastructure |
| Botnet robustness | |

7.4. Malware Spreading

The metric used is the infection rate metric that indicates the average number of vulnerable computers infected per time unit.

7.5. Adversarial Machine Learning

In adversarial machine learning, attackers can manipulate the features used by a model, thereby changing it. The attacker may act at different levels:

- at training time, by compromising the training data;

- by introducing noise into the algorithm or changing its parameters.

The strength of the attack can be measured by the increase in the false-positive and false-negative rates, i.e., how much the performance of the learning algorithm is degraded.

7.6. Obfuscation Attacks

From the point of view of obfuscation attacks, two metrics can be used:

- Obfuscation prevalence metric: measures how much particular behaviors are obfuscated.

- Structural complexity metric. Measures the runtime complexity of packers in terms of granularity.

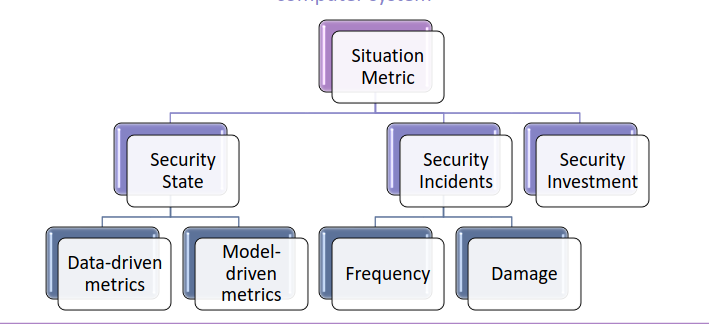

8. Situation Metric

A situation metric is used mostly at the tactical and strategic levels. It provides an overview of the state of the system. There are three main situation metrics:

- Security state

- Security incidents: the more prone we are to incidents, the more countermeasures the organization must take.

- Security investments: indicate whether the organization needs to invest more in security.

8.1. Data Driven metrics

| Metric | Description |
|---|---|
| Network maliciousness | The fraction of the network that is typically contacted by blacklisted IP addresses. It is important to consider together with the timeliness of the blacklisting metric, and to estimate potential entry points. It is also interesting because, for many different reasons, not all IP addresses may be blacklisted (e.g., honeypots). |
| Rogue network | Studies the portion of the network used as a target for phishing attacks; it is useful during forensic investigations after a phishing campaign. If this portion is large, there is an awareness problem. |
| ISP badness | Quantifies the level of spam coming from a specific autonomous system. It is used to identify countermeasures and to check whether the network is managed correctly at its boundary. |
| Control-plane reputation | Measures the strength of the attacker with respect to the deployed control-plane information: how good the access control mechanisms, firewall rules, and so on are. |
| Cybersecurity posture | An overall measure of how cybersecurity is addressed inside the organization. The NIST Cybersecurity Framework can be used to determine which categories are covered and to what extent. |

8.2. Model Driven Metrics

Here the idea is that we abstract the system using a model and then evaluate the state of the system given the model. There are two such metrics. The first is computed from the attack graph: identifying hosts and communication links, mapping the compromised hosts and links, and then counting them. The second models the system as a Markov chain, representing the number of compromised hosts at time \(t_k\) and estimating the probability that a certain number of hosts are compromised at time \(t_i\).
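A deliberately simple Python sketch of the Markov-chain idea. It assumes, as a hypothetical model, that at each time step one additional host becomes compromised with probability `p_spread` (until all hosts are compromised) and otherwise the count stays the same. The state is the number of compromised hosts, and the output is the probability distribution of that number after a given number of steps. Real models would derive the transition probabilities from the attack graph.

```python
def compromise_distribution(n_hosts, p_spread, initial_compromised, steps):
    """dist[k] = P(k hosts compromised) after `steps` time steps (ASSUMED model).

    Transition: k -> k + 1 with probability p_spread, k -> k otherwise;
    k = n_hosts (everything compromised) is absorbing.
    """
    if not 0 <= initial_compromised <= n_hosts:
        raise ValueError("initial_compromised must be between 0 and n_hosts")
    dist = [0.0] * (n_hosts + 1)
    dist[initial_compromised] = 1.0
    for _ in range(steps):
        nxt = [0.0] * (n_hosts + 1)
        for k, pk in enumerate(dist):
            if k == n_hosts:
                nxt[k] += pk  # absorbing state
            else:
                nxt[k] += pk * (1 - p_spread)
                nxt[k + 1] += pk * p_spread
        dist = nxt
    return dist


print(compromise_distribution(n_hosts=3, p_spread=0.5, initial_compromised=1, steps=2))
```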

8.3. Security Incidents

First of all we can try to evaluate the frequency of security incidents.

| Metric | Description |
|---|---|
| Encounter rate | |
| Incident rate | Ratio of detected incidents |
| Blocking rate | Ratio of blocked incidents notified in a specific period of time |
| Breach frequency | |
| Breach size | |
| Time between incidents | |
| Time to first compromise | Estimated time that elapses before the first compromise of the system |
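Several of these metrics can be computed from a simple incident log. The Python sketch below assumes each incident is recorded as a (day, blocked) pair within a fixed observation period; it computes the number of incidents per day, the blocking rate, and the mean time between incidents. The log is illustrative.

```python
from statistics import mean


def incident_metrics(incidents, period_days):
    """incidents: list of (day, blocked) pairs observed during `period_days` days."""
    days = sorted(day for day, _ in incidents)
    blocked = sum(1 for _, was_blocked in incidents if was_blocked)
    gaps = [later - earlier for earlier, later in zip(days, days[1:])]
    return {
        "incidents_per_day": len(incidents) / period_days,
        "blocking_rate": blocked / len(incidents) if incidents else 0.0,
        "mean_time_between_incidents": mean(gaps) if gaps else None,
    }


log = [(2, True), (5, False), (11, True), (20, True)]  # hypothetical 30-day log
print(incident_metrics(log, period_days=30))
```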

8.4. Damage of Security Incidents

The following two metrics can be used:

- Delay in incident reaction: measures the time between the occurrence and the detection of an incident.

- Cost of incidents: includes both direct and indirect costs.

These two metrics must be used together to provide a full picture of the incident.

8.5. Security Investment

The security investment metrics come from a business perspective. The first is security spending, which indicates the fraction of the ICT budget spent on addressing security issues; it helps balance the budget.

Security budget allocation estimates how the security budget is allocated to various activities and resources. Security involves many different aspects: investment in technology, personnel training, creation of new procedures, and so on.

Return on security investment (ROSI) is the last metric. Return on investment is a common index for evaluating the financial side of a company, quantifying the benefit of an investment made. Since security does not generate profit directly, the ROSI metric actually measures the reduction in the losses caused by inadequate security. This index is very useful for strategic management, providing fundamental information on how to modify the current investment strategy.