Forensics Analysis


1. MAIF 07

- Class: Malware Analysis and Incident Forensics

- Topic: Forensics

- Reference: The Art of Memory Forensics.

2. Incident Response versus Forensic Analysis

Forensic analysis entails different kinds of activities: evidence acquisition, log and timeline acquisition, media analysis, string search, data recovery, and malware analysis.

As soon as an attack is discovered, people start to behave differently, so it is a good idea to have a predefined plan of action that can be carried out without having to think too much. When an incident happens, every person can then play their role without losing time.

Cybersecurity incidents must be managed quickly to mitigate their impact and recover; a typical incident response plan provides guidance for each phase:

- Preparation

- Identification

- Containment

- Eradication

- Recovery

- Lessons Learned

After a cybersecurity incident, it is fundamental to learn from the incident itself to improve the process. Forensic analysis is the process used to extract useful information and, potentially, to prosecute those responsible. It starts immediately after the identification phase and typically ends after the eradication phase, once the last bits of information have been collected.
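As a small illustration of where forensic analysis sits in the plan, the sketch below encodes the phases in order and marks the typical forensic window (identification through eradication). The `Phase` enum and `forensics_active` helper are hypothetical names used only for this example.

```python
from enum import IntEnum


class Phase(IntEnum):
    """Incident response phases, in the order of the plan above."""
    PREPARATION = 1
    IDENTIFICATION = 2
    CONTAINMENT = 3
    ERADICATION = 4
    RECOVERY = 5
    LESSONS_LEARNED = 6


def forensics_active(phase: Phase) -> bool:
    """Typical forensic window: identification through eradication (inclusive)."""
    return Phase.IDENTIFICATION <= phase <= Phase.ERADICATION


if __name__ == "__main__":
    for phase in Phase:
        print(f"{phase.name:<16} forensic analysis active: {forensics_active(phase)}")
```

Using an ordered enum keeps the "starts after X, ends after Y" rule a simple range comparison; a real plan may well let the window stretch further.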

3. Digital forensics

Digital forensics refers to the set of activities that include the acquisition, preservation, and analysis of information, carried out in a way that guarantees the gathered evidence can be used in a trial.

For this reason, data has to be analyzed in a way that does not reduce its suitability for admission as a fact in a trial.

We have to put in place a set of standard practices that minimize the probability of corrupting the evidence through the very actions performed to collect it. Treating collected data as forensic digital evidence implies that the analyst must be able to demonstrate that its real nature was not altered while the information was being handled.
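The notes do not name a specific technique, but a widely used practice (an assumption here, not taken from the source) is to compute a cryptographic hash of the acquired image right after acquisition and recompute it before each analysis: matching digests indicate the bits were not changed. A minimal sketch with Python's `hashlib`; the file name `evidence.img` is hypothetical.

```python
import hashlib
import tempfile
from pathlib import Path


def sha256_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a (possibly very large) evidence file in fixed-size chunks."""
    h = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def verify_evidence(path: Path, recorded_digest: str) -> bool:
    """True if the file still matches the digest recorded at acquisition time."""
    return sha256_file(path) == recorded_digest.lower()


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as d:
        image = Path(d) / "evidence.img"           # hypothetical acquired image
        image.write_bytes(b"\x00" * 4096 + b"log line")
        digest = sha256_file(image)                 # written into the custody record
        print(verify_evidence(image, digest))       # True
        image.write_bytes(b"tampered")
        print(verify_evidence(image, digest))       # False
```

Reading in chunks keeps memory use constant even for multi-gigabyte dumps.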

Anything in digital form can be considered digital evidence: in the real world it can be a log line, the content of a database record, or an email. Testimony is presented to establish the process used to identify, collect, preserve, transport, store, analyze, interpret, and reconstruct the information contained in the exhibits; if the whole process is sound, the evidence can be taken into account.

Correctly managing the process is important so that, if challenged, the analyst can give a proper explanation and resolve doubts during the trial.

More broadly, today the term forensic analysis is also used for the post-mortem analysis of compromised assets, such as:

- Hosts infected by malware

- Servers compromised by attackers

- Network traces containing suspicious messages

In this case, when time is a concern, forensic procedures are applied more loosely within the incident management process, but a log of all actions must be kept to make sure that the result of the analysis is actually sound.
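One way to make such an action log harder to alter after the fact (an assumption, not a procedure from the notes) is to chain entries with hashes: each entry stores the hash of the previous one, so editing any past entry breaks verification. A minimal sketch; `ActionLog` and its fields are hypothetical.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _entry_hash(entry: dict) -> str:
    # Canonical JSON (sorted keys) so identical entries always hash identically.
    return hashlib.sha256(json.dumps(entry, sort_keys=True).encode()).hexdigest()


class ActionLog:
    """Append-only log where each entry commits to the hash of the previous one."""

    def __init__(self) -> None:
        self.entries: list = []

    def record(self, analyst: str, action: str) -> dict:
        prev = self.entries[-1]["hash"] if self.entries else GENESIS
        entry = {
            "time": datetime.now(timezone.utc).isoformat(),
            "analyst": analyst,
            "action": action,
            "prev": prev,
        }
        entry["hash"] = _entry_hash(entry)  # computed before "hash" is added
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute every hash and check each link to the previous entry."""
        prev = GENESIS
        for e in self.entries:
            body = {k: v for k, v in e.items() if k != "hash"}
            if e["prev"] != prev or _entry_hash(body) != e["hash"]:
                return False
            prev = e["hash"]
        return True
```

For example, `log.record("analyst1", "dumped RAM of host A")` appends an entry, and `log.verify()` returns `False` as soon as any recorded field is modified.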

4. Digital forensics process

A digital forensics process consists of several steps.

4.1. Identification

5. Memory Forensics

Memory forensics is important today for several reasons. First, when a system is powered on it is much easier to acquire data from an encrypted disk, because the key used to decrypt the data is stored in memory. Another important aspect, related to malware, is that memory forensics makes it possible to inspect data that is only available in memory, for example in the case of disk-less malware that targets machines that are not supposed to be rebooted frequently.

The same goes for rootkits: they work at kernel level and can therefore easily hide their presence from user-space software, while a full memory dump shows what the kernel was actually doing. Memory can also hold data created in the past: inside the memory of a running computer it is possible to find pieces of fairly old data. This is because when a process releases a data structure, the corresponding memory is marked as free but not overwritten.

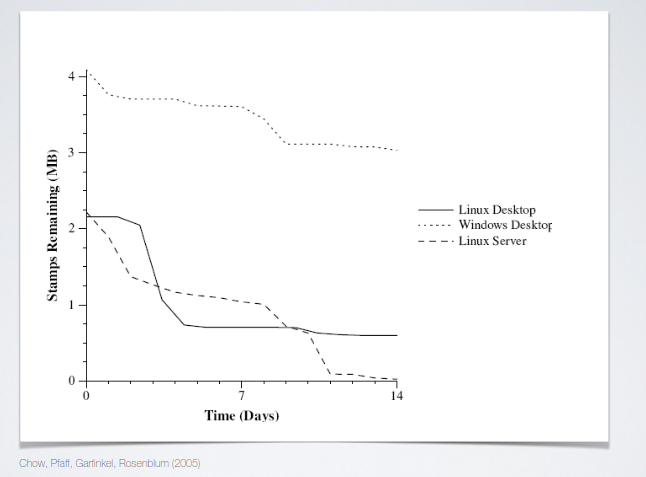

The graph represents the outcome of an experiment in which three machines running three different OSs were subjected to the same usage pattern. After some time, a snapshot of each machine's memory was taken to check what data was still available.

The lifetime of data stored in memory depends strongly on the operating system: on the Windows machine, 75% of the original data was still present after 14 days.

For example, after identifying a suspicious SSL packet on the network, it is possible to dump the memory of the host that sent it, extract the SSL key, and inspect the content of the packet.

Memory forensics has several goals; first of all, it makes it possible to identify who is doing what. Today the OS typically offers tools to securely delete data from persistent storage, but this kind of mechanism is hardly available at the memory level. Virtual memory decouples a process's view of memory from the physical memory layout, so there is no practical way to guarantee that sensitive data in memory is fully deleted; it is only possible to follow guidelines that minimize the time sensitive data stays in memory.

Memory forensics also gives the analyst another piece of the puzzle, which matters because most applications that handle sensitive data (for example, word processors) were not specifically designed to deal with it.

5.1. Persistence of memory

Data can persist through reboots of the machine. Without power, the content of RAM is lost within a very short time (seconds at normal operating temperature), but some techniques, such as the cooling used in cold boot attacks, can extend how long the data survives.

In the past, a memory check was performed at boot to erase and test all of RAM, but this practice has largely been abandoned to speed up the boot process and because RAM modules have become more reliable.

Live forensics comes with some constraints: the impact of the analysis must be minimized, since memory dumps are intrusive, and time is crucial, also because physical access to the machine is needed.

5.2. Approaches of Memory Forensics

There are two main approaches to memory forensics: live response and memory analysis. Live response is best suited when time is critical: acquisition and analysis are done in one step, and the tools used are obtrusive. Memory analysis focuses on gathering the best evidence: it decouples acquisition from analysis, and the analysis is performed on a memory dump (a cold copy of the memory), minimizing its footprint. The analysis is repeatable, while the acquisition is NOT.

5.3. Basic recap of Memory

The organization of memory is architecture-dependent, and different architectures use different memory addressing techniques, but almost all of them implement virtual addressing (some embedded devices do not).

Virtual memory allows the OS to expose to each process a uniform, flat view of memory, while the OS maps the virtual address space to physical memory locations (virtual-to-physical translation). The memory management unit (MMU) provides hardware support to make this translation as fast as possible, using data structures (page tables) maintained by the OS.

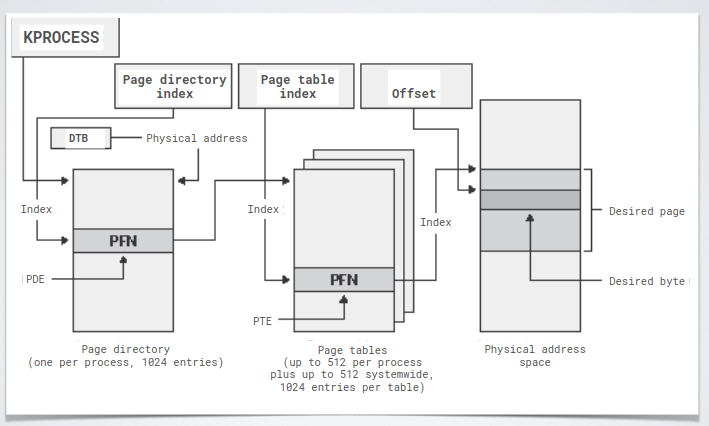

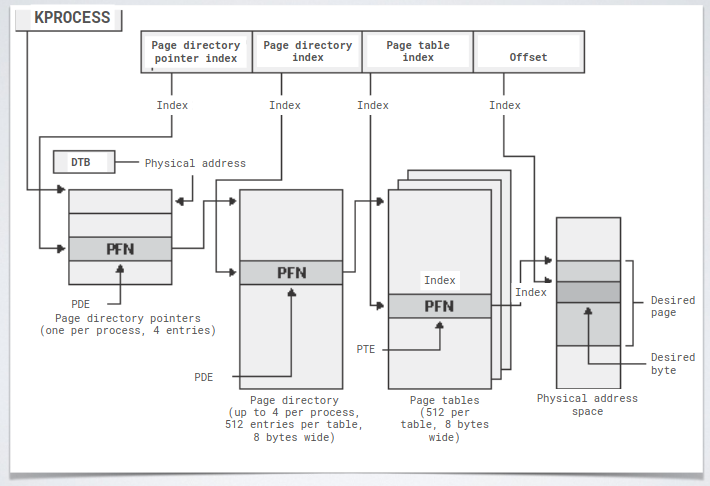

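The address space is divided into at least two parts: kernel space and user space. On 32-bit x86 (without extensions), a virtual address is divided into three fields: the page directory index (PDI, bits 31 to 22), the page table index (PTI, bits 21 to 12), and the offset within the page (bits 11 to 0).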

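The bits of each field are used in turn to translate the virtual address into a physical location: the PDI selects a page directory entry (PDE), which points to a page table; the PTI selects a page table entry (PTE), which points to a physical page; the offset selects the byte within that page.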

PDEs and PTEs include flags that indicate whether a virtual memory page is valid. During the transition from 32-bit to 64-bit architectures, an extension (Physical Address Extension, PAE) was introduced that adds a further level of indirection in order to enlarge the addressable space.
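The two-level walk described above can be sketched as follows for classic 32-bit x86 paging (no PAE, 4 KiB pages only). This is a simplified model: `read_u32` is a hypothetical callback that reads a little-endian 32-bit value from physical memory, and `cr3` is the physical address of the process's page directory.

```python
from typing import Callable, Optional, Tuple

PRESENT = 0x1            # bit 0 of a PDE/PTE: the entry is valid
FRAME_MASK = 0xFFFFF000  # bits 31..12: physical base of the next table / page


def split_va(va: int) -> Tuple[int, int, int]:
    """Split a 32-bit virtual address into (PDI, PTI, offset)."""
    pdi = (va >> 22) & 0x3FF   # bits 31..22: index into the page directory
    pti = (va >> 12) & 0x3FF   # bits 21..12: index into the page table
    offset = va & 0xFFF        # bits 11..0: byte offset within the 4 KiB page
    return pdi, pti, offset


def translate(va: int, cr3: int, read_u32: Callable[[int], int]) -> Optional[int]:
    """Two-level walk; returns the physical address, or None if a level is not present.

    Large (4 MiB) pages and PAE are deliberately not handled.
    """
    pdi, pti, offset = split_va(va)
    pde = read_u32((cr3 & FRAME_MASK) + pdi * 4)   # each entry is 4 bytes
    if not pde & PRESENT:
        return None
    pte = read_u32((pde & FRAME_MASK) + pti * 4)
    if not pte & PRESENT:
        return None
    return (pte & FRAME_MASK) | offset
```

Against a raw dump held in `dump: bytes`, `read_u32` could be `lambda pa: struct.unpack_from("<I", dump, pa)[0]`; this is, in spirit, what memory analysis tools do when they rebuild a process's view of memory.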

Virtual-to-physical (VtoP) translations are time-consuming, so Translation Lookaside Buffers (TLBs) cache recent address translations for the process in the current context.
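The caching idea can be modelled in software as a small LRU map from virtual page number to physical frame number, falling back to a full page-table walk on a miss. This is only a conceptual model of a TLB, not how hardware implements it; `walk` is a hypothetical callback returning the frame number or `None`.

```python
from collections import OrderedDict
from typing import Callable, Optional

PAGE_SHIFT = 12
PAGE_MASK = (1 << PAGE_SHIFT) - 1


class TLB:
    """Tiny LRU cache of virtual page number -> physical frame number."""

    def __init__(self, walk: Callable[[int], Optional[int]], capacity: int = 64) -> None:
        self.walk = walk            # slow path: full page-table walk for one page number
        self.capacity = capacity
        self.entries: "OrderedDict[int, int]" = OrderedDict()
        self.hits = 0
        self.misses = 0

    def translate(self, va: int) -> Optional[int]:
        vpn, offset = va >> PAGE_SHIFT, va & PAGE_MASK
        if vpn in self.entries:
            self.hits += 1
            self.entries.move_to_end(vpn)          # mark as most recently used
            frame = self.entries[vpn]
        else:
            self.misses += 1
            frame = self.walk(vpn)
            if frame is None:
                return None                        # page not present: nothing to cache
            self.entries[vpn] = frame
            if len(self.entries) > self.capacity:
                self.entries.popitem(last=False)   # evict least recently used
        return (frame << PAGE_SHIFT) | offset

    def flush(self) -> None:
        """Drop all cached translations, e.g. when switching to another process context."""
        self.entries.clear()
```

Two addresses in the same 4 KiB page share one cached entry, which is why a TLB pays off for the locality typical of real programs.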

Processes use physical memory through the virtual address space provided by the OS: even if a process sees its memory as a contiguous space, at the physical level its data is not contiguous but spread all over physical memory.
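The consequence for an analyst can be shown with a small sketch: reading a virtually contiguous buffer from a physical dump requires stitching together pieces of different physical frames. `page_map` (virtual page number to physical frame number) is a hypothetical stand-in for the result of page-table walks.

```python
PAGE_SIZE = 4096


def read_virtual(dump: bytes, page_map: dict, va: int, length: int) -> bytes:
    """Read `length` bytes starting at virtual address `va` from a physical dump.

    Pages missing from page_map (e.g. swapped out) are returned as zeros.
    """
    out = bytearray()
    while length > 0:
        vpn, offset = divmod(va, PAGE_SIZE)
        n = min(length, PAGE_SIZE - offset)   # never cross a page boundary in one read
        frame = page_map.get(vpn)
        if frame is None:
            out += b"\x00" * n
        else:
            start = frame * PAGE_SIZE + offset
            out += dump[start:start + n]
        va += n
        length -= n
    return bytes(out)
```

A string that straddles two virtual pages may not appear contiguously anywhere in the physical dump, which is one reason a plain byte search over the dump can miss data that a structured analysis finds.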

5.4. Acquisition Phase

Acquisition consists of capturing the full memory of the system, following guidelines that minimize the impact on the system. Modern OSs are designed to isolate the memory of different processes and different users; these features work against the analyst, so low-level interactions are required to perform a full memory dump.

When interacting with an untrusted system, the adversary may try to hide its presence. In the case of user-level malware, the tricks the malware can use are quite limited and can easily be spotted by using clean tools run from external media. In the case of root-level malware, the trickery can go much deeper: the malware may change anything, including the memory contents, during the analysis.

Hardware-based acquisition is possible: it consists of installing hardware modules in a PCI slot of the untrusted machine, which perform a bit-by-bit copy of the memory without interacting with the OS. This kind of hardware is expensive, and its usage relies on a strong assumption: the machine must be equipped with the module before the compromise takes place.

Software-based acquisition consists of using features exposed by the operating system. Software-based acquisition tools can only be launched with administrator privileges and are visible to an adversary. For example, the malware can stop when a memory dump is in progress, cover its execution by zeroing part of its memory, or, in the case of rootkits, fully substitute the information present in memory with crafted data.

A last approach is to use virtual machine snapshots, which is largely transparent to the OS but of course requires the machine under analysis to be a VM (a strong assumption). Other sources of memory dumps are hibernation files and pages swapped from memory to disk (pagefiles); a pagefile is just a collection of pages, which may contain old data.
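Since a pagefile has no structure tying its pages back to processes, a simple first pass is to enumerate page-sized slots and skip the empty ones before carving. The sketch below assumes 4 KiB pages and that unused slots are all zeros; both are simplifying assumptions, not guarantees about any specific OS.

```python
PAGE_SIZE = 4096


def nonempty_pages(pagefile: bytes, page_size: int = PAGE_SIZE):
    """Yield (offset, page) for every full page-sized slot that is not entirely zero.

    A trailing partial page, if any, is ignored.
    """
    zero = bytes(page_size)
    for off in range(0, len(pagefile) - page_size + 1, page_size):
        page = pagefile[off:off + page_size]
        if page != zero:
            yield off, page
```

Each yielded page can then be fed to the same string or pattern searches used on a raw memory dump.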

5.5. Memory Analysis info

A memory dump contains everything: data related to currently running processes, open network connections, open files, encryption keys for whole-disk encryption schemes, and copies of memory-only malware.

Memory analysis can be performed by simply treating the whole memory dump as a plain sequence of bytes and looking for patterns or for elements of known data structures (network protocol structures such as TCP, OS structures); this way it is possible to extract strings, multimedia files, and so on.
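String extraction, the most basic form of this unstructured search, can be sketched as a regular-expression scan for runs of printable characters, in both ASCII and UTF-16LE (the latter is common for Windows strings). The 4-character minimum is an arbitrary, commonly used default, not a value from the notes.

```python
import re


def extract_strings(dump: bytes, min_len: int = 4):
    """Yield (offset, text) for printable ASCII and UTF-16LE runs of at least min_len chars."""
    ascii_re = re.compile(rb"[\x20-\x7e]{%d,}" % min_len)
    utf16_re = re.compile(rb"(?:[\x20-\x7e]\x00){%d,}" % min_len)
    for m in ascii_re.finditer(dump):
        yield m.start(), m.group().decode("ascii")
    for m in utf16_re.finditer(dump):
        yield m.start(), m.group().decode("utf-16-le")
```

Every offset is reported with no notion of which process owned the bytes, which illustrates why this approach is noisy.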

This kind of analysis tends to produce a lot of noise, because the tool only checks whether a run of contiguous bytes matches a given pattern, and the fragmentation of memory pages makes the search itself harder. Nevertheless, this approach is useful when looking for stale data. On the other hand, when looking for data associated with running processes, the memory is analyzed by rebuilding the state of the OS at the time of the acquisition: a targeted search.

Volatility is a free tool for memory analysis that is particularly good at structured analysis: it identifies the main kernel data structures and rebuilds the state of the OS. Volatility also tracks the parent-child relationships among processes.
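Rebuilding the parent-child view from a flat process list can be sketched as below. The input tuples `(pid, ppid, name)` are hypothetical sample data, and processes whose parent is not in the list (for example because it has exited) are treated as roots. This is an illustration of the idea, not Volatility's own code.

```python
from collections import defaultdict


def build_tree(processes):
    """processes: iterable of (pid, ppid, name). Returns (procs, roots, children)."""
    procs = {pid: (ppid, name) for pid, ppid, name in processes}
    children = defaultdict(list)
    roots = []
    for pid, (ppid, _) in sorted(procs.items()):
        if ppid in procs and ppid != pid:
            children[ppid].append(pid)
        else:
            roots.append(pid)  # parent exited or never seen: treat as a root
    return procs, roots, children


def render(processes) -> str:
    """Render the tree with one leading dot per level of depth."""
    procs, roots, children = build_tree(processes)
    lines = []

    def walk(pid, depth):
        lines.append("." * depth + f"{procs[pid][1]} ({pid})")
        for child in children[pid]:
            walk(child, depth + 1)

    for root in roots:
        walk(root, 0)
    return "\n".join(lines)
```

For instance, a `cmd.exe` child of `explorer.exe` shows up indented under it, which makes unusual parents easy to spot.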

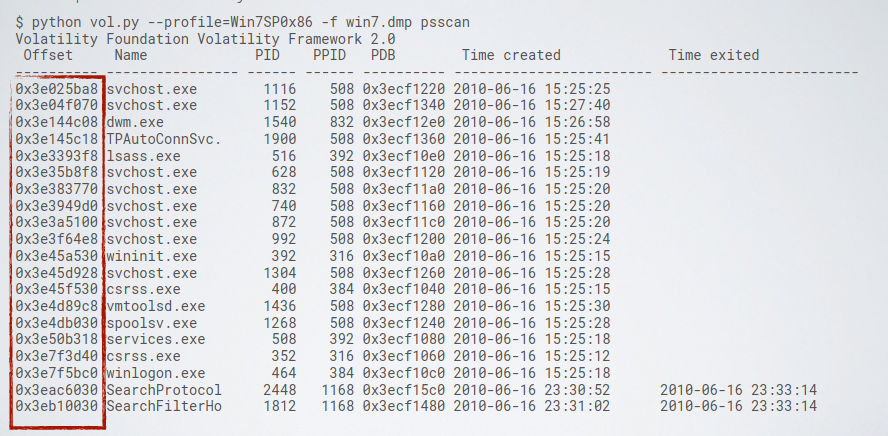

The adversary may hide its presence by altering kernel-level data structures, for example by removing its process from the process list; in this case, scanning the memory for process artifacts may give better results. Volatility's psscan command allows the analyst to identify process data structures that are detached from the main list, and to find control blocks of processes that have terminated:

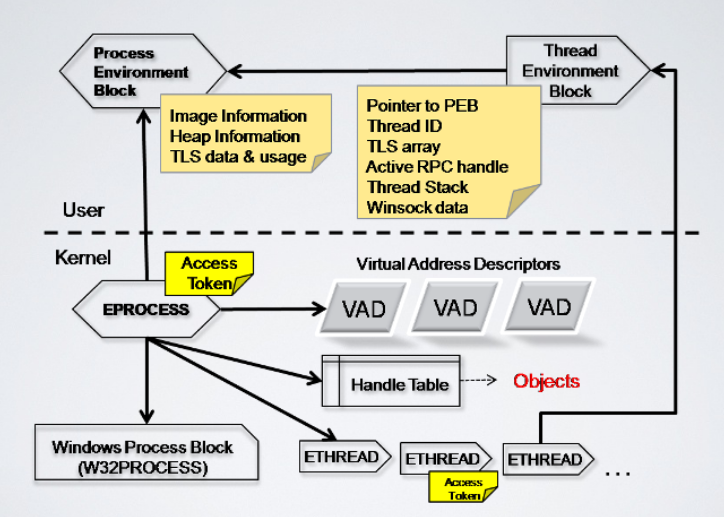

EPROCESS is a kernel-level data structure, linked into a doubly linked list, that contains a pointer to the Process Environment Block (PEB), a user-level data structure holding information about the process.

Volatility's pslist follows the EPROCESS list to rebuild the list of running processes; some malware, however, unlinks itself from this list to hide. psscan instead looks for sequences of bytes that match the layout of an EPROCESS block, even if the block is not linked into the list.
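The difference between walking the list and scanning memory can be simulated in a few lines. `Proc` is a heavily simplified, hypothetical stand-in for an EPROCESS block, `memory` is a dict from address to block, and the `b"Proc"` signature plays the role of the byte pattern a scanner looks for.

```python
from dataclasses import dataclass


@dataclass
class Proc:
    """Simplified stand-in for an EPROCESS block: identity, list links, signature."""
    pid: int
    name: str
    flink: int = 0          # address of the next entry in the list
    blink: int = 0          # address of the previous entry in the list
    tag: bytes = b"Proc"    # hypothetical signature the scanner matches on


HEAD = 0  # address of the list head (stand-in for PsActiveProcessHead)


def link(memory: dict, addrs: list) -> None:
    """Chain the blocks at `addrs` into a circular doubly linked list through HEAD."""
    chain = [HEAD] + addrs
    for i, a in enumerate(chain):
        memory[a].flink = chain[(i + 1) % len(chain)]
        memory[a].blink = chain[i - 1]


def unlink(memory: dict, addr: int) -> None:
    """Hide a process: neighbours skip it, but its block stays in memory."""
    p = memory[addr]
    memory[p.blink].flink = p.flink
    memory[p.flink].blink = p.blink


def pslist(memory: dict) -> set:
    """List walk: follow flink pointers from the head until back at the head."""
    seen, addr = set(), memory[HEAD].flink
    while addr != HEAD:
        seen.add(memory[addr].pid)
        addr = memory[addr].flink
    return seen


def psscan(memory: dict) -> set:
    """Signature scan: ignore the links and check every block."""
    return {p.pid for a, p in memory.items() if a != HEAD and p.tag == b"Proc"}
```

Comparing both views (`psscan(memory) - pslist(memory)`) exposes processes that are present in memory but unlinked from the list. Real scanners also validate many fields to weed out false positives, which this sketch omits.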

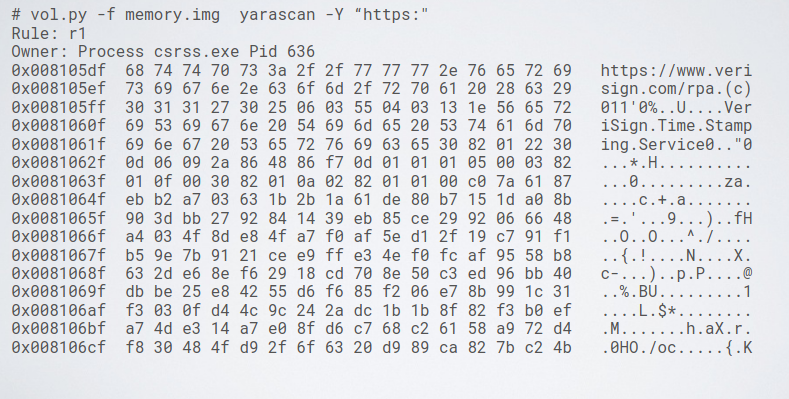

YARA can be used in combination with Volatility to perform a low-level analysis.

YARA rules can also be applied to a single process, selected by its PID.
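Real YARA rules are written in YARA's own rule language and evaluated by the YARA engine (in Volatility, through its yarascan plugin). Purely to illustrate the "strings plus condition, optionally scoped to one PID" idea, the sketch below imitates a rule whose condition is "all of them" with Python regular expressions. `Rule`, `scan`, and the per-PID `regions` dict are hypothetical and are not YARA's API.

```python
import re
from dataclasses import dataclass
from typing import Optional


@dataclass
class Rule:
    """Stand-in for a YARA rule: named byte patterns with an 'all of them' condition."""
    name: str
    patterns: dict  # identifier -> bytes regex

    def matches(self, data: bytes) -> bool:
        return all(re.search(p, data) for p in self.patterns.values())


def scan(regions: dict, rule: Rule, pid: Optional[int] = None) -> list:
    """regions maps PID -> that process's memory bytes; restrict to one PID if given."""
    targets = {pid: regions[pid]} if pid is not None else regions
    return sorted(p for p, data in targets.items() if rule.matches(data))
```

Scoping to one PID mirrors the per-process option described above: only that process's memory is tested against the rule.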

5.6. Kernel Debugging DS

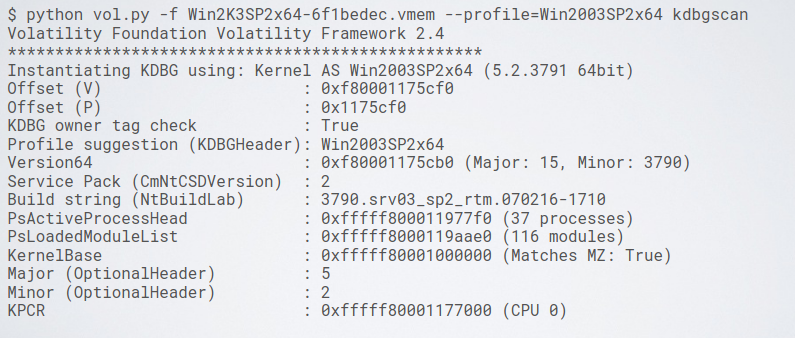

The kernel debugger data block, also called KDBG, is linked to the list of running processes: the pslist command uses the KDBG data structure and follows PsActiveProcessHead to rebuild the list of running processes. The same data structure also contains pointers to the loaded drivers (modules).

Microsoft occasionally modifies the structure of the KDBG slightly between releases, so Volatility can use its structure to infer the version of the OS.
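A simplified sketch of this idea: the KDBG block starts with a header whose 4-byte `OwnerTag` is the ASCII string `KDBG`, immediately followed by a 4-byte little-endian `Size` field, and the size differs across Windows versions. The size-to-version table below is a hypothetical placeholder; real values must come from a trusted reference such as the tool's own profiles.

```python
import struct

# Hypothetical placeholder values, NOT real Windows KDBG sizes.
SIZE_TO_VERSION = {
    0x1111: "OS version A (placeholder)",
    0x2222: "OS version B (placeholder)",
}


def find_kdbg(dump: bytes):
    """Yield (offset_of_tag, size, guess) for each 'KDBG' OwnerTag found in the dump."""
    start = 0
    while (i := dump.find(b"KDBG", start)) != -1:
        if i + 8 <= len(dump):                               # need room for the Size field
            (size,) = struct.unpack_from("<I", dump, i + 4)  # Size follows OwnerTag
            yield i, size, SIZE_TO_VERSION.get(size, "unknown")
        start = i + 1
```

A bare tag match can be a false positive, so real scanners validate more of the structure before trusting a candidate.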

5.7. Interactive Volatility Session

Volatility can also be used interactively (volshell): it spawns a Python shell that maps the memory data structures onto Python data structures.
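The idea of mapping raw memory bytes onto Python objects can be illustrated with the standard `ctypes` module. `ProcRecord` is a hypothetical, simplified layout (not the real EPROCESS); Volatility itself relies on OS-specific type definitions rather than anything like this.

```python
import ctypes


class ProcRecord(ctypes.LittleEndianStructure):
    """Hypothetical, simplified process record; NOT the real EPROCESS layout."""
    _fields_ = [
        ("pid", ctypes.c_uint32),
        ("ppid", ctypes.c_uint32),
        ("name", ctypes.c_char * 16),   # NUL-padded image name
    ]


def overlay(dump: bytes, offset: int) -> ProcRecord:
    """Interpret the bytes at `offset` as a ProcRecord (copied, so the dump is untouched)."""
    return ProcRecord.from_buffer_copy(dump, offset)


def image_name(rec: ProcRecord) -> str:
    """Decode the name field up to the first NUL byte."""
    return bytes(rec.name).split(b"\x00", 1)[0].decode("ascii", errors="replace")
```

Once overlaid, fields are read as ordinary attributes (`rec.pid`, `rec.ppid`), which is the convenience an interactive shell offers when exploring a dump.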